It’s super simple: In order to be successful with mobile marketing, you have to know the science behind testing.

Testing is used throughout the mobile marketing funnel: in UA, Product, and ASO.

As someone in the weeds of ASO, you face a unique challenge. Other digital marketers who are tasked with improving growth metrics usually control the environment they’re trying to optimize. This could be a website or even an app where they can install analytics solutions, measure how users behave and respond to changes, and act accordingly, hopefully driving improvements in the KPIs they’re trying to move.

As an ASO person, you’re tasked with driving improvements on an asset you don’t even own: your app store page(s).

This means it’s impossible to run native tests comparable to the ones “other” marketers run. And these tests are your only tool for driving ongoing improvements, so getting them right is key to doing your job and growing.

Get into the science of it, but just enough

Don’t make the mistake of diving too deep into the science of testing. As with everything in life, it’s important to know where to invest to make the maximum impact, and where going the extra mile will have diminishing returns. This is exactly where you should apply the Pareto principle (well, sort of): invest the 20% of time it takes to reach 80% of the expertise you need. Trying to reach 100% expertise will keep you from doing the things you really want to focus on to maximize your growth.

That’s why we’ve got you covered with this handbook, busting myths and uncovering some truths about the wild, wild west that is the world of app store page testing.

This is the 20%. That’s my promise to you if you read through this guide.

What is A/B/n Testing?

How does an A/B Test Usually Work?

An A/B test is a randomized experiment, in the sense that people in the sample (in our case, app store visitors) are assigned to variants at random. At its core, the test compares two variants and performs statistical hypothesis testing on them.

Throughout this piece, we will look at one of the most beloved characters ever written for TV, Breaking Bad’s Walter White, for reference and to have some fun. We like to think of our data scientists here at Storemaven as geniuses with the same level of expertise as Mr. White, albeit with a lesser degree of self-destructive behavior.

In our example, Mr. White and his partner/mentee Jesse are wondering whether blue meth will get more buyers than white meth. As a science teacher, Mr. White wants to run an A/B test and decides that the best way to do this is to set up two variations to sell, blue and white, on his darknet website.

His null hypothesis is that “users” will be indifferent to the color of the meth.

That is, H0: The conversion rate of the blue meth version is equal to that of the white meth version.

However, Jesse tells him that blue meth “is the bomb”, so he forms his alternative hypothesis:

H1: The conversion rate of the blue meth version is different from that of the white version.

Variant 1: The homepage will show simple white meth.

Variant 2: The homepage will show and discuss blue meth.

To run a proper A/B test, Mr. White knows he needs to do something very important: decide how many people to sample throughout the test, or in other words, set the sample size in advance. Without doing so, Mr. White won’t be able to know when it’s valid to stop the test and perform statistical inference.

We need to set the sample size in advance so that we have a stopping rule that’s independent of the observations we get. If you keep collecting samples, the result of the test can keep changing, because the result depends heavily on the sample size.

So how should Mr. White determine the sample size?

He first needs to determine these factors:

- The significance level for the experiment

Since you’re reading this article, we can assume you’re no stranger to testing and statistics and have probably heard the term “statistical significance” before.

Bear with us. In simplified terms, the significance level is the threshold you set for the probability of rejecting the null hypothesis (users are indifferent between the blue and white meth) when it is actually true.

In other words, if there’s really no difference, you want the chance of wrongly concluding that blue meth is significantly better, or worse, to be 5% or less. That’s how most people in this field operate: with a 5% significance level.

There are a ton of issues with choosing a 5% significance level that we won’t go into, but the reality is that treating it as a universal standard is absurd. The 5% was given as an example in a famous book by Sir Ronald Fisher and was never meant to serve as a yardstick for all statistical tests out there. Why shouldn’t that number be 3%? 2%? 1%? Using the same sample data, you might get different results based on this number. Let’s dive a bit deeper:
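To see how much rides on this one number, here is a minimal Python sketch of a pooled two-proportion z-test run on a single hypothetical dataset (10,000 visitors per variant converting at 10.0% and 10.9%; made-up numbers, not results from a real test) and judged against several significance levels.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value of a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))

# Hypothetical data: 1,000 vs 1,090 installs out of 10,000 visitors each.
p_value = two_proportion_p_value(1_000, 10_000, 1_090, 10_000)
decisions = {alpha: p_value < alpha for alpha in (0.05, 0.03, 0.02, 0.01)}

print(f"p-value = {p_value:.4f}")
for alpha, significant in decisions.items():
    print(f"significance level {alpha:.0%}: {'winner' if significant else 'no winner'}")
```

With these numbers the p-value lands between 3% and 5%, so the very same data produce a winner at the 5% level and no winner at 3%, 2%, or 1%.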

- The Minimum Detectable Effect (MDE)

The MDE is the smallest change from the baseline you are willing to accept as an actual change. For example, if your baseline conversion rate is 10% and you only want to treat an increase or decrease of at least 5% (relative) as ‘a change’, you’ll treat the range between 9.5% and 10.5% (±0.5 percentage points, which is 5% of 10%) as indistinguishable from the baseline.

- The Baseline Conversion Rate

The baseline conversion rate is simply the “actual” conversion rate that the control is showing.

- The Statistical Power

The statistical power of the test is the probability of detecting a difference at least as large as the MDE, assuming one exists. The higher the power, the lower the chance we’ll mistakenly conclude that there isn’t a difference between white meth and blue meth when in fact there is.
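To make power concrete, the sketch below uses a standard normal approximation for a two-sided, two-proportion test to estimate power at a few sample sizes. The 10% baseline and 3% relative MDE are illustrative assumptions. The takeaway is that higher power (fewer missed real differences) costs more samples.

```python
from math import sqrt
from statistics import NormalDist

def approx_power(n_per_variant, baseline, relative_mde, alpha=0.05):
    """Approximate power of a two-sided two-proportion z-test (normal approximation)."""
    z = NormalDist()
    delta = baseline * relative_mde        # absolute difference we want to detect
    p_alt = baseline + delta
    z_alpha = z.inv_cdf(1 - alpha / 2)
    sd_null = sqrt(2 * baseline * (1 - baseline))
    sd_alt = sqrt(baseline * (1 - baseline) + p_alt * (1 - p_alt))
    # Ignores the negligible chance of a "significant" result in the wrong direction.
    return z.cdf((delta * sqrt(n_per_variant) - z_alpha * sd_null) / sd_alt)

for n in (20_000, 80_000, 160_000):
    print(f"{n:>7,} visitors per variant -> power {approx_power(n, 0.10, 0.03):.0%}")
```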

The (first) big problem with A/B tests

That’s one of the most common mistakes with A/B tests: running them without a clear, fixed sample size set in advance. Without one, there’s no clear stopping rule, and the moment you decide to conclude the test dramatically affects the result you’ll get. It’s basically cherry-picking, allowing your intentions to distort the results of the test (as you can look at the results and terminate the test when they are convenient).

Rejecting the null hypothesis (and finding a winner in the A/B test) requires calculating a P-Value: the probability, assuming the null hypothesis is true, of getting a result at least as “extreme” as the one you got. If it is lower than the significance level, the null hypothesis is rejected.

Mathematically, collecting more samples shrinks the standard error of the measured difference, so even a tiny difference eventually produces a low P-Value. And every time you check the results and are ready to stop, random noise gets another chance to cross the significance threshold. So simply by waiting and letting more data flow in, you are artificially making it easier to reject the null hypothesis.
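The effect is easy to demonstrate with a simulation. The sketch below runs hypothetical A/A tests, where both variants share the same true 10% conversion rate, so any “winner” is a false positive. It compares checking a pooled z-test after every batch of traffic with a single check at the planned sample size. The batch size, number of looks, and seed are arbitrary choices for illustration.

```python
import random
from math import sqrt
from statistics import NormalDist

def is_significant(conv_a, n_a, conv_b, n_b, alpha=0.05):
    """Pooled two-proportion z-test: True if the two-sided p-value is below alpha."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return False
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z))) < alpha

def simulate_aa_tests(n_tests=300, looks=10, batch=200, cvr=0.10, seed=7):
    """A/A tests: both variants share the same true CVR, so every 'winner' is false."""
    rng = random.Random(seed)
    peeking_hits = fixed_hits = 0
    for _ in range(n_tests):
        conv_a = conv_b = n = 0
        declared_winner = False
        for _ in range(looks):
            conv_a += sum(rng.random() < cvr for _ in range(batch))
            conv_b += sum(rng.random() < cvr for _ in range(batch))
            n += batch
            # A peeker checks after every batch and stops at the first "significant" look.
            if not declared_winner and is_significant(conv_a, n, conv_b, n):
                declared_winner = True
        peeking_hits += declared_winner
        # A disciplined tester checks once, at the planned sample size.
        fixed_hits += is_significant(conv_a, n, conv_b, n)
    return peeking_hits / n_tests, fixed_hits / n_tests

peeking_rate, fixed_rate = simulate_aa_tests()
print(f"False-positive rate, checking after every batch: {peeking_rate:.0%}")
print(f"False-positive rate, one look at the planned sample size: {fixed_rate:.0%}")
```

Because the peeking strategy gets ten chances to cross the 5% threshold instead of one, its false-positive rate typically lands several times higher than the fixed-sample rate, even though nothing differs between the variants.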

The (second) big problem with A/B tests

“Yo, Mr. White, isn’t that a lot of things for you to know? Like… assume before we, you know, actually know anything?”

“Yes, Jesse!”

The criteria you need to set upfront are anyone’s guess. First, in most cases, you don’t really know the baseline conversion rate. Second, the significance level and power of the test are abstract numbers that different people can set differently, depending on their preferences. If different people look at the same data, using the same method but with different parameters, and reach different conclusions, well, what did we do there?

This opens up a can of worms that allows test planners/managers to almost guarantee a result that suits them, by inflating the likelihood of false positives or false negatives (by playing with the significance level and power). Let’s continue with our example:

So, by using 5% as the significance level, 80% as the power, 10% as the baseline conversion rate, and 3% (relative) as the MDE, Mr. White calculated that he needs 157,603 samples per variation. Luckily, Mr. White has a ton of traffic and can get that in a week. The test ran for a week, and Mr. White closed it only after it reached 157,603 samples per variation.
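The article doesn’t say which formula Mr. White used, but a common normal-approximation formula for comparing two proportions reproduces the figure, assuming the 3% MDE is relative (0.3 percentage points on a 10% baseline). Here is a minimal sketch that also shows how the required sample size swings when you pick different, equally defensible, significance and power values:

```python
from math import ceil, sqrt
from statistics import NormalDist

def sample_size_per_variant(baseline, relative_mde, alpha=0.05, power=0.80):
    """Visitors needed per variant for a two-sided two-proportion test (normal approximation)."""
    z = NormalDist()
    delta = baseline * relative_mde              # absolute difference to detect
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    sd_null = sqrt(2 * baseline * (1 - baseline))
    sd_alt = sqrt(baseline * (1 - baseline) + (baseline + delta) * (1 - baseline - delta))
    return ceil(((z_alpha * sd_null + z_beta * sd_alt) / delta) ** 2)

print(sample_size_per_variant(0.10, 0.03))  # 157603

for alpha, power in [(0.10, 0.80), (0.05, 0.90), (0.01, 0.80)]:
    n = sample_size_per_variant(0.10, 0.03, alpha, power)
    print(f"alpha={alpha:.0%}, power={power:.0%}: {n:,} per variant")
```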

Test results and arriving at a conclusion

The results showed:

- White Meth Variation = 12.04% conversion rate

- Blue Meth Variation = 12.86% conversion rate

Mr. White then ran a two-proportion test on the difference between the conversion rates and calculated the test’s P-Value. He then checked whether the P-Value was below the significance level.

As the P-Value was lower than 0.1%, well below the 5% significance level, he rejected the null hypothesis (that there’s no difference) and concluded that there is a clear user preference: blue meth carries a higher conversion rate than white meth. Mr. White went on to apply the blue meth variation on the homepage and waited to see the cash roll in. Not so fast (said Hank…).
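For reference, here is a pooled two-proportion z-test on the reported rates. It assumes exactly 157,603 visitors per variation and treats the rounded rates as exact; it is a sketch, not necessarily the exact test Mr. White ran.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_z_test(rate_a, rate_b, n_per_variant):
    """Pooled two-proportion z-test for two equally sized variants; returns (z, two-sided p)."""
    pooled = (rate_a + rate_b) / 2  # pooled rate when both variants have the same sample size
    se = sqrt(pooled * (1 - pooled) * 2 / n_per_variant)
    z = (rate_b - rate_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

z, p_value = two_proportion_z_test(0.1204, 0.1286, 157_603)
print(f"z = {z:.2f}, p-value = {p_value:.1e}")
```

The z-score comes out near 7, so the p-value is far below 0.1%, consistent with the conclusion above.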

A/B testing isn’t the only way to go: Bayesian inference can help

“Yo, Mr. White, yo,” said Jesse with a mouth full of smoke, “didn’t you teach us about a different statistics guy that said P-Value is bullshit?”

“A statistics guy?!?! Jesse!”

“Yeah, what was his name, c’mon. Oh! Vase, I remember it was Vase! What about him?”

“Bayes, Jesse. Bayes.”

Jesse’s comment got Mr. White pondering the test results. “Am I sure the results are right? Could they be false positives?”

He remembered an example he used to explain the difference to students:

“Imagine that you’ve lost your phone, but you know for certain it’s somewhere in your house. Suddenly you hear it ringing in one of the five rooms. You know from past experience that you often leave your phone in your bedroom.” What now? There are two options:

Frequentist Reasoning

A frequentist approach would require you to stand still and listen to your phone ring, hoping you can tell with enough certainty from where you’re standing (without moving!) which room it’s in. And, by the way, you wouldn’t be allowed to use what you know about where you usually leave your phone (in the bedroom).

Bayesian Reasoning

A Bayesian approach is well aligned with our common sense. First, you know you often leave your phone in your bedroom, so you have an increased chance of finding it there, and you’re allowed to use that knowledge. Secondly, each time the phone rings, you’re allowed to walk a bit closer to where you think the phone is. Your chances of finding your phone quickly are much higher.
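The phone example maps directly onto Bayes’ rule: the posterior for each room is proportional to the prior times the likelihood of what you just heard. The sketch below assumes hypothetical numbers, a prior that favors the bedroom and a likelihood for “the ring sounds like it’s coming from upstairs”.

```python
def bayes_update(prior, likelihood):
    """Posterior is proportional to prior x likelihood, renormalized to sum to 1."""
    unnormalized = {room: prior[room] * likelihood[room] for room in prior}
    total = sum(unnormalized.values())
    return {room: weight / total for room, weight in unnormalized.items()}

# Hypothetical prior: you usually leave the phone in the bedroom.
prior = {"bedroom": 0.40, "kitchen": 0.15, "living room": 0.15,
         "bathroom": 0.15, "office": 0.15}
# Hypothetical likelihood of the ring sounding like it comes from upstairs,
# given the phone is in each room (bedroom, bathroom, and office are upstairs).
sounds_upstairs = {"bedroom": 0.7, "kitchen": 0.2, "living room": 0.2,
                   "bathroom": 0.7, "office": 0.7}

posterior = bayes_update(prior, sounds_upstairs)
for room, prob in sorted(posterior.items(), key=lambda kv: -kv[1]):
    print(f"{room:>11}: {prob:.0%}")
```

Each additional ring is another call to `bayes_update`, with the previous posterior as the new prior. That is the “walk a bit closer” step.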

In the frequentist way of thinking, data are simply collected through a test, and a conclusion is inferred from those data alone.

In the Bayesian way of thinking, there is no “test”. There’s simply the collection of data and the updating of your prior starting point on how these data are distributed. You then analyze the model that results from this process to draw conclusions.

After checking revenues for the two days since he implemented the blue meth on his homepage, Mr. White saw they hadn’t really changed much compared to the previous period. He decided to check again himself and run another experiment, this time based on the Bayesian approach.

Since this approach relies heavily on updated data, he did not need to set arbitrary parameters to run the test. He decided to account for the daily variation, so every day at the same time he looked at the results and updated the calculated distribution of each CVR.

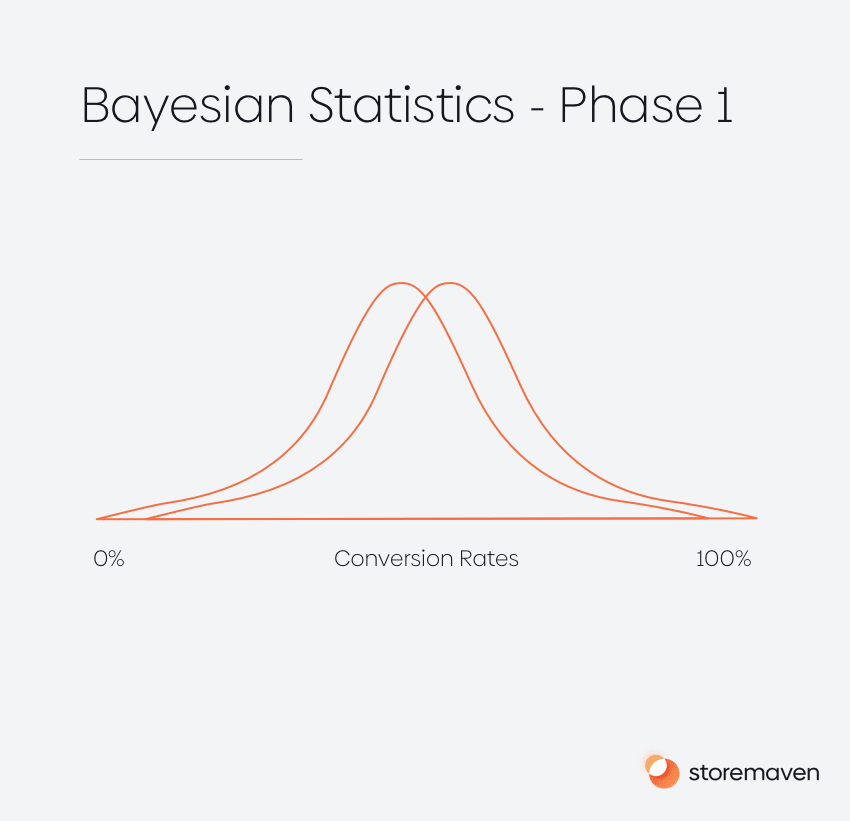

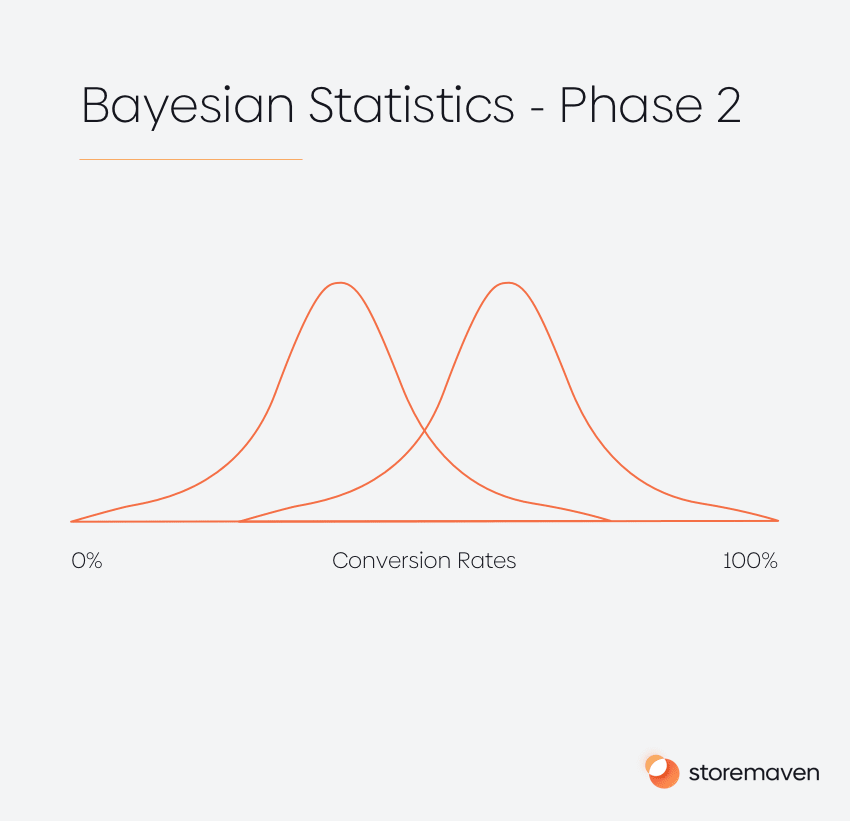

Instead of collecting 157,603 samples per variation, stopping the test, calculating the P-Value, and comparing it to the chosen significance level, Mr. White’s Bayesian approach guided him to draw out the distribution of each variant’s CVR (phase 1) and, with new data coming in every day, to update those distributions (phase 2) so they more accurately reflected the data he observed.

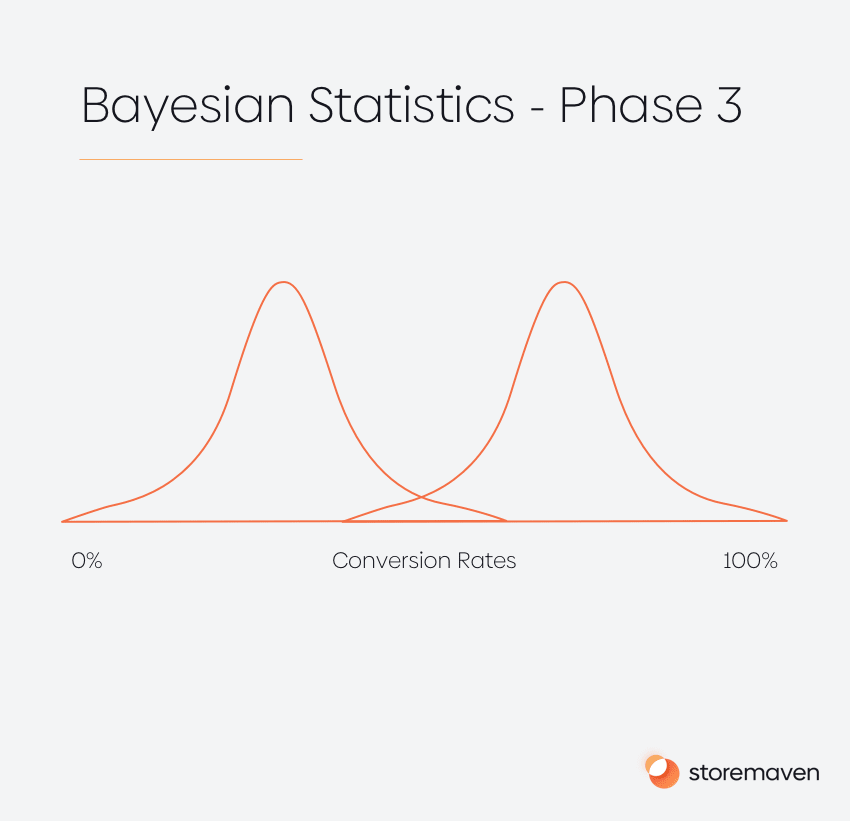

As the conversion rate distribution curves became more distinct, he wanted to reach a conclusion. After a week’s worth of data, he could see the following situation (phase 3):

It seems there is a very low chance of the white variation converting at a higher rate than the blue meth version. In fact, the chance of that happening is less than 1%, and even then, white would likely convert no more than 2% higher than the blue version.
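A common way to implement this kind of daily updating is Beta-Binomial conjugate updating. You start each variation’s CVR at a Beta prior, add installs and non-installs as they arrive, then sample from the posteriors to compare variations. The sketch below does this with a uniform Beta(1, 1) prior and hypothetical daily counts (roughly 12.0% vs 12.9% CVR). It illustrates the general technique, not the exact model behind the charts above.

```python
import random

# Hypothetical daily (visitors, installs) per variation; illustrative only.
daily = {
    "white": [(5000, 598), (5000, 603), (5000, 596), (5000, 607),
              (5000, 601), (5000, 594), (5000, 601)],
    "blue": [(5000, 641), (5000, 648), (5000, 639), (5000, 652),
             (5000, 644), (5000, 645), (5000, 646)],
}

# Start from a Beta(1, 1) (uniform) prior on each variation's CVR,
# then fold in each day's data; in practice you'd inspect it after every update.
posterior = {name: [1, 1] for name in daily}
for day in range(7):
    for name, days in daily.items():
        visitors, installs = days[day]
        posterior[name][0] += installs             # successes update alpha
        posterior[name][1] += visitors - installs  # failures update beta

rng = random.Random(42)
draws = 20_000
white = [rng.betavariate(*posterior["white"]) for _ in range(draws)]
blue = [rng.betavariate(*posterior["blue"]) for _ in range(draws)]

p_white_better = sum(w > b for w, b in zip(white, blue)) / draws
lifts = sorted(b / w - 1 for w, b in zip(white, blue))  # relative lift of blue over white
low, high = lifts[int(0.025 * draws)], lifts[int(0.975 * draws)]

print(f"P(white converts better than blue) = {p_white_better:.2%}")
print(f"95% credible interval for blue's relative lift: {low:.1%} to {high:.1%}")
```

With these made-up counts, the estimated chance of white beating blue is well under 1%, and the credible interval for blue’s lift stays above zero.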

Satisfied by these results, Mr. White closed the test, implemented the blue variation and started to track revenues.

Jesse and Mr. White drove off toward the horizon, happy with the results. Mr. White calculated that they generated $1.4M more than they would have if they had stuck with the white meth version.

They drove into the parking lot of “Los Pollos Hermanos”, satisfied and rich.

In the words of Jesse: “Yeah! Science B****!”

The 4 main testing methods in the App Store market today – which should you use?

Let’s leave Mr. White and Jesse to their known fate and get back to our world of app store testing. There are four main ways to test app store pages today:

- 3rd party tools that use classical A/B testing, the kind we described in the first example, where a two-sided hypothesis test was performed to determine the winner.

- 3rd party tools that use sequential testing, an alternative A/B testing approach that tries to identify big winners early on.

- Google Experiments, which use Google’s own in-house frequentist approach.

- 3rd party tools that use Bayesian-inspired testing.

1. Classical A/B Testing

How can you use such a test in the app stores?

There are some 3rd party tools that offer testing and use classical A/B testing as their statistical engine.

Prerequisites to set up a test

- Choose a significance level

- Set the baseline

- Choose an MDE (Minimum Detectable Effect)

- Choose a statistical power

- Use the above to calculate a sample size

The probability to get the prerequisites right

As we discussed in the example above, the three parameters you choose that dictate the sample size (significance level, MDE, and power) aren’t grounded in any real principle. Anyone can change them, and every change can drastically affect the result of the test even when the data are the same. There is no particular reason to choose any specific value.

How prone is it to errors or manipulation?

- Setting test parameters upfront in a way that isn’t consistent with relevant factors, such as traffic characteristics, number of variations, etc., leads to wrong conclusions about the test results.

- One of the most common mistakes is to start running such a test without a sample size fixed in advance. This makes the results prone to ‘cherry-picking’, as the test manager can watch the results, wait until they reach significance, and then close the test. This dramatically increases the likelihood of a false positive.

Other drawbacks?

Usually, this method requires a very large sample size. Driving such a sample to a test using a 3rd party tool will cost tens of thousands of dollars per test.

2. Sequential Testing

How can you use such a test in the app stores?

There are certain 3rd party tools that offer testing and are using sequential testing as their statistical engine.

Where did this method come from?

Let’s set the history straight: According to online resources, “The method of sequential analysis is first attributed to Abraham Wald with Jacob Wolfowitz, W. Allen Wallis, and Milton Friedman while at Columbia University’s Statistical Research Group as a tool for more efficient industrial quality control during World War II…”.

Yup. This method originated as a way to detect faulty production lines during World War II. Sequential testing helped production managers detect abnormalities in their production lines as early as possible, or in other words, understand the rate at which these production lines produce faulty products (originally guns, tanks, and other products) and get alerted if there’s something wrong with a specific line. Interestingly enough, this approach was adopted by some in the app store testing market.

How does it work?

The method is based on continuously collecting samples and detecting early on whether there’s a winner, which allows you to stop the test early (before reaching the fixed sample size decided for the test).

It’s different from classical A/B testing in that it allows you to ‘save’ samples in certain scenarios (which we’ll dive into in a sec). That being said, it has many drawbacks: some relate to the nature of the method, and some are the same drawbacks as classical A/B testing.
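For the curious, here is a minimal sketch of Wald’s sequential probability ratio test (SPRT) in its original quality-control framing: a production line with an acceptable fault rate `p0` versus an alarming rate `p1`. After each observation the log-likelihood ratio is updated, and the test stops as soon as it crosses either boundary. The rates, error levels, and data are hypothetical, and app store testing tools built on sequential testing may well use different variants of the idea.

```python
import random
from math import log

def sprt(observations, p0, p1, alpha=0.05, beta=0.20):
    """Wald's SPRT for a Bernoulli rate: H0 rate == p0 vs H1 rate == p1 (p1 > p0)."""
    upper = log((1 - beta) / alpha)   # crossing it accepts H1
    lower = log(beta / (1 - alpha))   # crossing it accepts H0
    llr, n = 0.0, 0
    for n, is_faulty in enumerate(observations, start=1):
        # Add this observation's log-likelihood ratio of H1 vs H0.
        llr += log(p1 / p0) if is_faulty else log((1 - p1) / (1 - p0))
        if llr >= upper:
            return "H1", n
        if llr <= lower:
            return "H0", n
    return "undecided", n

# Hypothetical production line: acceptable fault rate 2%, alarm if it's 5%.
rng = random.Random(1)
line = (rng.random() < 0.08 for _ in range(10_000))  # this line actually faults 8% of the time
print(sprt(line, p0=0.02, p1=0.05))
```

The number of observations consumed before a decision is not fixed in advance; it depends on how quickly the evidence accumulates, which is where the potential sample savings come from.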

Prerequisites to set up a test

Set a fixed sample size by deciding and setting:

- Significance level – The same ‘loaded’ term we discussed before which many in the world choose to be 5%, for no good reason other than routine.

- Minimum detectable effect – In this approach, it’s easy to understand intuitively. Originally, it’s the acceptable threshold for production line fault rates. In the world of app store testing, it loses its meaning, as there is no such threshold (wouldn’t you be happy with a 2%, 3%, or 4% increase in performance?).

- Statistical Power – The same term from classical A/B testing, which most of the world chooses to set at 80%, again for no good reason other than routine.

- Baseline Conversion Rates – To calculate the size of the sample needed to drive to the test you need to set the baseline conversion of your app store page, which is, of course, impossible to do before starting to sample a traffic source.

The probability to get the prerequisites right

As a mobile marketer, you’ll find that different combinations of these parameters can, and often will, result in different conclusions (different winners, or no winner at all).

In addition, estimating your conversion rates in advance is pretty much impossible, and if you’re wrong, which you’ll only know after starting to run traffic to the test, the sample size that you originally set will be invalid, making the test invalid in turn.

Some tools actually try to “hack” this by updating your sample size as the test progresses (based on the actual baseline conversion rates observed). This is a strict violation of the principle of setting a fixed sample size in advance. It amounts to ‘cherry-picking’, changing the “stopping rule” to fit your needs, and it creates very skewed results.

How prone is it to errors or manipulation?

- Setting the parameters upfront is an impossible feat. The parameters will control how long the test will run which could result in different winners as a function of the incoming traffic at each time point. This alone opens up much room for debate on the validity of the results and clearly shows how prone these tests are to yield false results (either a winner that is not a real winner, or a failure to find a winner when in fact there is one).

- Sequential testing is not a great fit for app store page testing because of its weakest points, quoting Evan Miller, who wrote an extensive article on this:

- “The procedure works extremely well with low conversion rates. With high conversion rates, it works less well, but in those cases, traditional fixed-sample (i.e. classical A/B testing) methods should do you just fine”.

- “The sequential procedure presented here excels at quickly identifying small lifts in low-conversion settings — and allows winning tests to be stopped early — without committing the statistical sin of repeated significance testing. It requires a tradeoff in the sense that a no-effect test will take longer to complete than it would under a fixed-sample regime”.

These two weaknesses are exactly the phenomena that are prevalent in app store page testing. The average conversion rate of most traffic sources used in app store testing (with a 3rd party tool) is usually not low (Evan Miller describes ‘low’ as below 10%, and in many cases below 5%). This means these tests won’t actually close faster than other methods. Moreover, when there isn’t a clear winner, these tests will take much longer to conclude. In many phases of testing as a mobile marketer, you’ll face tests showing that users are indifferent between variations, and those tests contribute a lot to your learning for the next ones.

Other drawbacks?

Above all, a testing method that pulls you away from your core competencies, diverting effort from thinking creatively about messaging and getting to know your users into figuring out statistical parameters, isn’t a positive contributor to your efforts in the long term. Why should you solve testing on your own?

3. Google Experiment Testing – Scaled Installs

How does it work?

Google Play Custom Store Listing Experiments allow you to set up to three variations (not including the mandatory current store design), set the percentage of traffic that each variation will get and then start running the test on 100% of the population that fits the test criteria (either global, by country, or by language).

After the test starts running, at some point Google Experiments shows you several metrics for each variation:

- Actual Installs – The actual number of installs that the variation generated.

- Scaled Installs – The theoretical number of installs that the variation would’ve gotten if it had been set to receive 100% of traffic.

- Retained Installs (Day 1, Day 7, Day 15, Day 30)

- Confidence Interval – The range of lift/decrease from the current variation in which the “true” lift value lies. The confidence level is 90%

Prerequisites to set up a test

- Deciding on the percentage of traffic each variation will get. No fixed sample size is set in advance, but the use of Confidence Interval metrics points to the fact that Google’s approach here is frequentist.

The probability to get the prerequisites right

As there are no test-related statistical parameters to set, Google Experiments seems to be a black box, with no clear visibility into the statistical calculations it runs on the data to get those Confidence Intervals. That being said, without a fixed sample size, the test is prone to cherry-picking errors: test managers keep checking the results until they feel they’re conclusive, usually when the results serve their purpose.

How prone is it to errors or manipulation?

- The statistical validity of the confidence interval is questionable – as it is based on the scaled installs metric which isn’t real, but theoretical. A frequently observed phenomenon in the industry is a negative correlation between the impression volume and conversion rate. The higher the impression volume, the lower the conversion rate. So assuming scaled installs will grow linearly from the actual installs figure is quite a big assumption. Another drawback here is that inflating the metric values also lowers the variance, which makes it easier to reach significance.

- We don’t really know how Google calculates the confidence interval, which means we don’t know exactly how error-prone that calculation is.

- The lack of a fixed sample size opens up each experiment to the significant human error we pointed out earlier: ‘cherry-picking’, or constantly checking the test results and closing the test once they satisfy the test manager.
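We don’t know how Google actually computes its intervals, so the following is only a hypothetical illustration of the scaled-installs concern above. Scaled installs assume installs grow linearly with traffic, and if scaled counts were treated as real observations, the standard error would shrink by the square root of the traffic share, making intervals look tighter than the data justify. All numbers are made up.

```python
from math import sqrt

def scaled_installs(actual_installs, traffic_share):
    """Linear extrapolation to 100% of traffic (the assumption questioned above)."""
    return actual_installs / traffic_share

def cvr_standard_error(installs, visitors):
    """Standard error of a conversion rate under a simple binomial model."""
    cvr = installs / visitors
    return sqrt(cvr * (1 - cvr) / visitors)

# Hypothetical variation: 25% of traffic, 20,000 visitors, 6,000 installs.
share, visitors, installs = 0.25, 20_000, 6_000

real_se = cvr_standard_error(installs, visitors)
inflated_se = cvr_standard_error(scaled_installs(installs, share), visitors / share)

print(f"Scaled installs: {scaled_installs(installs, share):,.0f}")
print(f"Standard error with real counts:   {real_se:.4f}")
print(f"Standard error with scaled counts: {inflated_se:.4f}")
```

Scaling by 1/0.25 leaves the conversion rate unchanged but quadruples the apparent sample, which halves the standard error.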

Other drawbacks?

The fact that Google Experiments uses a significance level of 10% (a 90% confidence level) means that, even if their statistical assumptions hold, up to one in ten comparisons between variations that actually perform the same will still show a “significant” difference. That renders many of the results you see pretty much useless as a decision-making tool, and this is the best-case scenario, as we can’t validate their statistical assumptions.

4. Bayesian Testing

How can you use such a test in the app stores?

StoreMaven has developed the StoreIQ methodology that is based on Bayesian statistics.

How does it work?

The world of digital experience testing is moving fast toward Bayesian statistics and inference. Even Google itself has moved this way with Google Optimize 360, its world-leading testing product for web experiences.

Wait, what? Didn’t we say that Google Play Custom Store Listing Experiments is using frequentist statistics?

Yup. Although we can’t know what goes on at the back end of Google Experiments, it appears to rely heavily on a frequentist methodology for inference. There could be many reasons for that, but above all, Google is a very large company with hundreds of products, and some of these products get more development resources than others. Implementing Bayesian statistics and inference in a testing product requires immense computing power and infrastructure investment.

Google explains it better than us here: Google’s Optimize Methodology Explained.

So, what is Bayesian inference?

Bayesian inference is a fancy way of saying that we use data we already have to make better assumptions about new data. As we get new data, we refine our “model” of the world, producing more accurate results.

Bayesian Inference works differently than frequentist testing that is heavily focused on providing a p-value. In fact, Bayesian Inference doesn’t require a p-value at all. The process looks something like this:

- Collect raw data, in our case observations on users landing on one of the app store page variants and tracking whether a user chose to install or not.

- Estimate the conversion rate (CVR) distribution of each variation using the data collected. The distributions will be instrumental in estimating which variation is the most likely to have the largest CVR.

- Regularly collect more data and update the CVR distributions. In this sense, the model adapts to new data and updates our ‘view’ of what each variation’s CVR distribution curve looks like.

- As you collect more data, the distribution curves of each variation become more clearly defined.

- Regularly compute statistics from these distributions, such as the probability that each variation will generate a higher conversion rate than all the others.

- When we can conclude that one variation’s CVR distribution is clearly showing that it has a very small probability of surpassing the leading variation we can close down that variation, and stop sending traffic to it, which saves significant traffic costs.

- Once we conclude that there is a very high probability that one variation’s CVR distribution curve will lead to higher conversion rates we can clearly call a winner.
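The loop above can be sketched with Beta-Binomial posteriors and Monte Carlo draws that estimate each variation’s probability of having the highest CVR. This is a generic Bayesian approach, not StoreIQ’s actual implementation. The Beta(1, 1) priors, the 1% drop threshold, the 95% winner threshold, and the counts are all hypothetical.

```python
import random

def prob_best(counts, draws=20_000, seed=0):
    """Monte Carlo estimate of P(each variation has the highest CVR), Beta(1, 1) priors."""
    rng = random.Random(seed)
    wins = dict.fromkeys(counts, 0)
    for _ in range(draws):
        sampled_cvr = {name: rng.betavariate(1 + installs, 1 + visitors - installs)
                       for name, (visitors, installs) in counts.items()}
        wins[max(sampled_cvr, key=sampled_cvr.get)] += 1
    return {name: w / draws for name, w in wins.items()}

DROP_BELOW = 0.01  # hypothetical: stop sending traffic to a variation below this
WIN_ABOVE = 0.95   # hypothetical: call a winner above this

# Hypothetical (visitors, installs) collected so far for three variations.
counts = {"A": (10_000, 1_200), "B": (10_000, 1_290), "C": (10_000, 1_050)}

probs = prob_best(counts)
dropped = [name for name, p in probs.items() if p < DROP_BELOW]
winner = next((name for name, p in probs.items() if p > WIN_ABOVE), None)
print(probs, "drop:", dropped, "winner:", winner)
```

In a live test you would rerun this after each new batch of data, stop sending traffic to anything in `dropped`, and close the test once `winner` is set.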

Prerequisites to set up a test

There are none that you need to worry about!

You read it right. Bayesian statistics don’t assume you know anything about the significance level, statistical power, the minimum detectable effect or the required sample size. Because… you don’t.

The probability to get the prerequisites right

As there are no prerequisites the probability to get them wrong is 0%.

How prone is it to errors or manipulation?

Given the methods available today, and the clear move of the overall digital testing industry toward Bayesian inference models, we strongly believe that this is the most accurate method to test app store pages.

Other factors to consider

- By using StoreIQ, we save as much traffic as possible without sacrificing accuracy, as we can clearly see when certain variations’ CVR distribution curves are “far” from becoming a winner and stop sending traffic to them.

- This method isn’t sensitive to cherry-picking as the distributions tend to be more defined as you collect more samples, and there is no p-value that dictates the result of the test.

- You avoid the possibility of changing test results by setting different significance levels, power, or MDE levels; they simply aren’t a part of the decision-making process.

Why it’s more crucial to make sure you’re not getting false positives with App Store Testing

It’s more about quality than quantity.

In the past era of app store optimization and marketing, many opted to test very often, hoping to see a clear winner, to the bliss of the entire team. The results in the live store were neglected, as there were fewer ways to quantify the impact of creative updates.

Today that’s not enough. Many ASO and mobile marketing teams have started to treat ASO as organic user acquisition. As with user acquisition, the KPIs these teams are evaluated on are cold, hard install metrics, more specifically organic installs.

In this world, to succeed as a mobile marketer or as an ASO manager, you need to drive results, and in order to do that, one of the top tools in your arsenal is creative testing, enabling you to increase the conversion rates for the most valuable audience.

Spending hundreds of hours and tens of thousands of dollars on tests that are inherently flawed is like playing Russian roulette with your career growth. Why tie your success to testing methods that are known to produce a large share of false results?

There’s also the opportunity cost of investing time in tests that produce questionable results. There are much better ways to spend your time.

In this landscape, we would recommend optimizing for quality tests instead of volume. As a marketer at your core, try to get to the truth – what creatives and messaging tend to convince more of your (highest value) audience to install your app. Long term, that’s the only way to succeed.