With millions of mobile apps and games competing in a saturated marketplace, optimizing your Google Play Store Listing or Apple App Store Product Page is a must if you want to differentiate your product and gain a competitive advantage.

Given the intense competition on both platforms, it was a significant step forward when Google launched Google Play Store Listing Experiments, its own tool to help developers A/B test app store assets and identify which combinations of art and messaging drive the most installs.

To what extent should developers rely on this tool?

Let’s start by explaining Google Play Experiments.

What is Google Play Store Listing Experiments?

Google Play Experiments, or GEx for short, is Google’s tool for app developers to test their Google Play Store Listing creatives with the goal of improving Google Play conversion rates.

With Google Play Store Listing Experiments, you can run live tests on your real Google Play traffic.

How does Google Play Experiments work?

Google lets you run the following types of experiments:

- Global experiment (default graphics): exposes all users to the experiment, unless the current version has localized assets, in which case only users whose language matches the app’s default language are exposed.

- Localized experiment: targets users by language, so anyone whose Google Play app is set to the designated language is exposed to the experiment.

What’s the maximum number of localized store listing experiments that can be run at once in Google Play?

For each app, you can run one default graphics experiment or up to five localized experiments at the same time. A default graphics experiment tests graphics in your app’s default store listing language and can include variants of your app’s icon, feature graphic, screenshots, and promo video.

How do you use custom store listings in Google Experiments?

Google also allows you to run Experiments on custom store listings. Custom Store Listing is a feature in the Google Play Developer Console that lets you customize your Google Play Store Listing for a country or a group of countries. This differs from a Localized Store Listing, which targets users by language rather than by geographic location.

You can set up to five Custom Store Listings, and each country can be targeted by only one of them (for example, you can’t target Italy in two separate custom store listings).

What type of experiments can you run?

Within Google Play Experiments you can test your:

- Icon

- Screenshots

- Feature Graphic

- Promo Video

- Long Description

- Short Description

You can’t currently test your title with Google Play Experiments.

How does Google Play Experiments conclude a test?

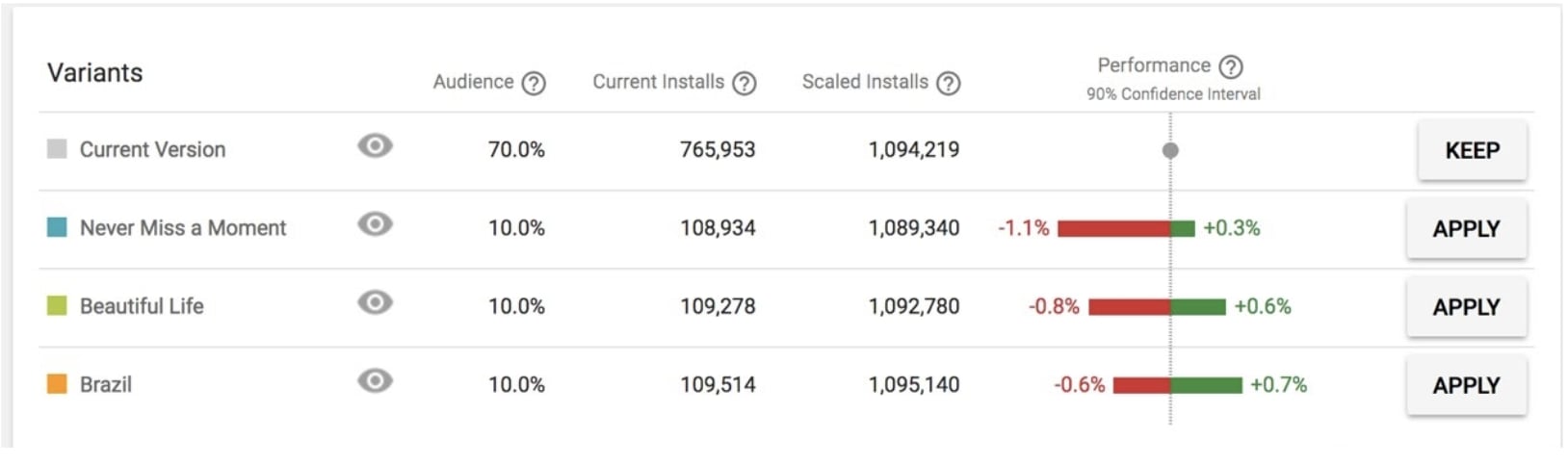

- Developers using this tool can test up to three variants against their current version to see which one performs best based on install and retention data.

- The variants are implemented within the Google Play platform, which means that all installs are real installs (more on that later).

- Developers choose what percentage of their traffic to allocate to their current version, and the remaining traffic is split evenly among the other variations.

- The data collected are installs per variation per hour. A statistical test is run on a scaled-up measure of recorded installs, in which each variation’s installs are divided by the share of traffic allocated to it. Google does not document which statistical test it performs.

- A confidence interval is calculated for each non-current variation, comparing its “scaled installs” with those of the current version. As soon as a variation’s confidence interval lies entirely above zero, the test is deemed conclusive. If the confidence intervals of all variations lie entirely below zero, the test is also concluded, in favor of the current version. Otherwise, the experiment reports that not enough data have been collected or that the test ended in a tie.
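Google does not document its exact test, so the following is only a minimal Python sketch of the procedure described above, under stated assumptions: install counts are treated as independent Poisson counts, and a normal-approximation 90% confidence interval (the level the Play Console reports) is placed on the difference in scaled installs between each variation and the current version. All function names and numbers are hypothetical.

```python
import math
from statistics import NormalDist


def allocate_traffic(current_share: float, n_variants: int) -> list[float]:
    """Current version gets current_share; the rest is split evenly among variants."""
    if not 0 < current_share < 1 or n_variants < 1:
        raise ValueError("need 0 < current_share < 1 and at least one variant")
    variant_share = (1 - current_share) / n_variants
    return [current_share] + [variant_share] * n_variants


def scaled_installs(installs: int, share: float) -> float:
    """Installs divided by traffic share: a naive estimate of installs at 100% traffic."""
    return installs / share


def compare_to_current(cur_installs: int, cur_share: float,
                       var_installs: int, var_share: float,
                       confidence: float = 0.90) -> tuple[float, float]:
    """Hypothetical CI for scaled installs (variant minus current).

    Assumption: installs are Poisson, so Var(installs / share) ~= installs / share**2.
    """
    diff = scaled_installs(var_installs, var_share) - scaled_installs(cur_installs, cur_share)
    se = math.sqrt(var_installs / var_share**2 + cur_installs / cur_share**2)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.645 for 90%
    return diff - z * se, diff + z * se


def verdict(intervals: list[tuple[float, float]]) -> str:
    """Decision rule from the text, applied to each variant's (low, high) interval."""
    if any(low > 0 for low, _ in intervals):
        return "variant wins"
    if all(high < 0 for _, high in intervals):
        return "current version wins"
    return "inconclusive"


shares = allocate_traffic(0.5, 2)  # [0.5, 0.25, 0.25]
intervals = [
    compare_to_current(1000, shares[0], 560, shares[1]),  # variant A
    compare_to_current(1000, shares[0], 480, shares[2]),  # variant B
]
print(verdict(intervals))  # prints "variant wins"
```

In this example, 1,000 installs on a 50% share scale to 2,000, while variant A’s 560 installs on a 25% share scale to 2,240. A’s interval lies entirely above zero, so the sketch calls the test for A, even though variant B’s interval still straddles zero.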

Let’s continue: should you rely on this tool?

Although it offers a handful of advantages as developers begin to improve their Store Listings and test the waters of optimization, many of these benefits aren’t as significant as they seem. In fact, we analyzed thousands of Google Experiments and found that only 15% were successful in finding a winning variation that wasn’t the Control.

Based on our experience working with Google Experiments and helping mobile publishers test creatives on our platform, we want to set the record straight about several misconceptions about the tool, specifically what it can help you accomplish and where it falls short as a standalone app store optimization (ASO) platform.

Google Play Store Listing Experiments Misconceptions:

#1: There is No Cost to Use Google Experiments

On the surface, developers aren’t paying anything extra to conduct Google Experiments tests. It’s a free tool within the Play Console that’s accessible to all Android developers.

Caveats: While there’s no monetary cost, you are sending a significant percentage of your live traffic to untested variations whose performance you don’t yet know. On one hand, a strong variation could bring in a lot of installs. On the other hand, showcasing a weak variation could cost you real installs.

Additionally, while Google Experiments allows you to set the percentage of traffic sent to each variation, you don’t get to choose which traffic segment each variation receives. This more closely mirrors how the live store works, but many developers use testing platforms to segment their users by specific criteria, isolating high-value users, who have significantly higher lifetime value (LTV) or retention, from lower-quality users who won’t contribute to the bottom line.

To take it a step further, you have no way of knowing which audiences you’re converting on Google Experiments and whether they will drive the most long-term value. On testing platforms, you can take these business considerations into account and identify the unique interests and preferences of high-value users before deciding which variation to put in the live store.

#2: You Can Drive Long-Term Improvements in Conversion Rate (CVR)

On Google Experiments, you can conduct tests on a variety of assets including Icon, Poster Frame (Video thumbnail), Short Description, Long Description, Video, and Screenshots. When you look at the results in the Google Play Console, you can see which variation received the most installs (i.e., the “winner”).

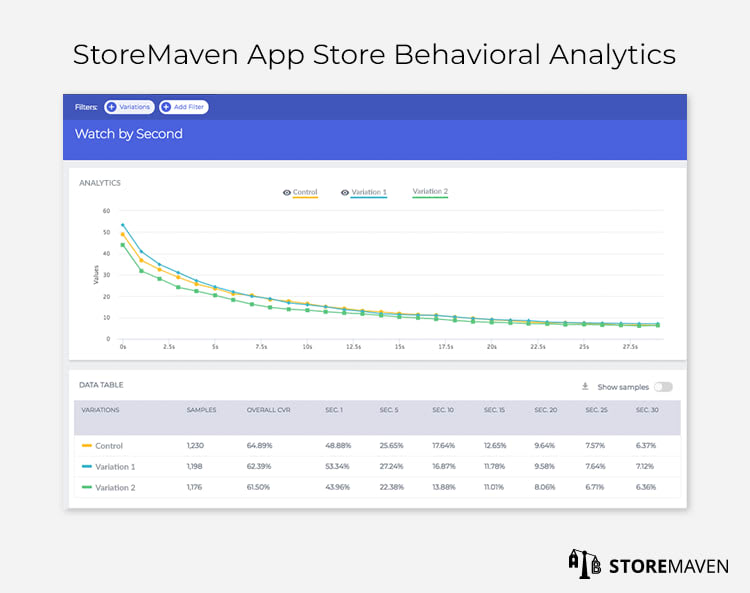

Caveat: The biggest question these results leave unanswered is why. Why did one variation perform better than another? What specific creative or message convinced visitors to install? The key to pulling valuable learnings is analyzing app store engagement data, which is most effectively tracked by third-party app store testing platforms. It’s difficult to iterate on the winning variation and drive additional CVR improvements if you don’t understand the reasoning behind each win.

For example, if you’re testing different Icons, you’ll need to understand more than just which design drove the most installs. You’ll want to know what impact each version has on visitor behavior and overall conversion rates.

Perhaps one Icon variation led to more decisive behavior (people quickly deciding based solely on the assets they see above the fold in the First Impression). Alternatively, another variation may have caused visitors to explore more of your Store Listing because the Icon piqued their interest and made them want to learn more about your app. In some cases, we’ve seen the Icon and the Screenshots visible in the First Impression work together to significantly impact engagement and CVR (both positively and negatively).

Even though Google Experiments will find a “winner,” you need more granular insights beyond the number of installs to create a long-term ASO strategy.

#3: You Can Uncover Adequate and Actionable Insights

Based on the winning and losing variations in Google Experiments, you can hypothesize what your general audience responds to and can make educated assumptions as to what worked or didn’t.

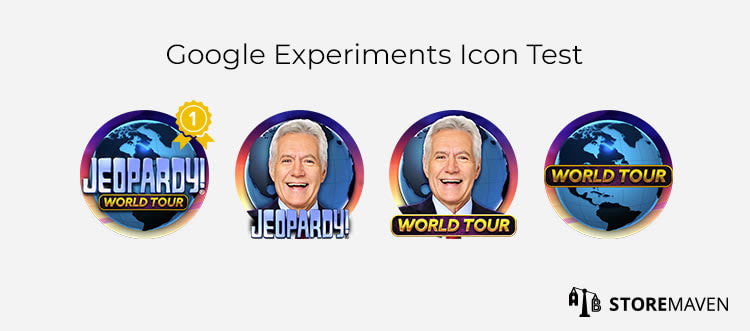

Here’s an example from a Google Experiment Icon test that Sony ran for Jeopardy! World Tour:

The experiment tested four Icon variations, and after a certain period of time, one of the variations began to receive 100% of traffic. We can assume this Icon was declared the “winner.”

Given the design of the winning Icon, it’s possible to hypothesize that showcasing the show’s branding in the form of its logo is important to installers, even more so than highlighting its well-known host, Alex Trebek. You can also assume that just displaying the text “World Tour” doesn’t have enough recognizable branding to pull in Jeopardy lovers who will probably install this game.

Caveat: As with the previous misconception, though, you don’t have enough data to support, reject, or even build on your hypotheses.

Are visitors immediately dropping if they don’t see the show’s host or logo in the Icon? Are visitors installing at a higher rate when they see the show’s branding? How does the Icon complement or clash with other assets above the fold, and does this affect exploration or conversion? What types of users are you converting with this Icon, and are they an audience you’re trying to attract? Most importantly, what should you test next given the results of this test? Without analyzing these granular engagement metrics, any insights you pull from Google Experiments results are just assumptions: useful as initial learnings, but not as conclusions you can rely on.

This is especially true for Videos. If you’re testing the presence of a Video or even testing different Videos against each other, you need more than just assumptions based on number of installs to create actionable insights. On an app store testing platform, for example, you can see the drop and install rates at any given second in a Video. If you understand which exact content and messages drove the most installs, you can optimize the Video for the next tests and display the most impactful content at the beginning to capture installs before visitors stop watching.
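As a rough illustration of per-second Video metrics, here is a minimal Python sketch. It assumes a hypothetical session log where each visitor who started the Video is recorded with the second they stopped watching and an outcome label ("drop", "install", or "complete"). Real testing platforms will have their own data model. At each second, the sketch computes drop and install rates among the visitors still watching at that point.

```python
from collections import Counter
from typing import NamedTuple


class VideoSession(NamedTuple):
    stop_second: int  # second at which the visitor stopped watching
    outcome: str      # hypothetical labels: "drop", "install", or "complete"


def per_second_rates(sessions: list[VideoSession],
                     video_length: int) -> dict[int, tuple[float, float]]:
    """Map each second to (drop_rate, install_rate) among visitors still watching."""
    events = Counter((s.stop_second, s.outcome) for s in sessions)
    rates = {}
    for t in range(video_length + 1):
        watching = sum(1 for s in sessions if s.stop_second >= t)
        if watching == 0:
            break  # nobody left watching
        rates[t] = (events[(t, "drop")] / watching,
                    events[(t, "install")] / watching)
    return rates


sessions = [
    VideoSession(2, "drop"),
    VideoSession(2, "install"),
    VideoSession(5, "drop"),
    VideoSession(10, "complete"),
]
rates = per_second_rates(sessions, video_length=10)
print(rates[2])  # (0.25, 0.25): 4 watching, 1 dropped, 1 installed
```

A spike in the install rate at a particular second points to content worth moving earlier in the Video. A spike in the drop rate marks content worth cutting or reworking.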

You might even find that a Video in general doesn’t work for the audience you want to target, and a variation with no Video converted the highest quality users. Hypotheses and insights based on the results of Google Experiments can only take you so far. You need data-supported insights to better inform your hypotheses and tests.

#4: The Statistical Method Is Sufficiently Accurate

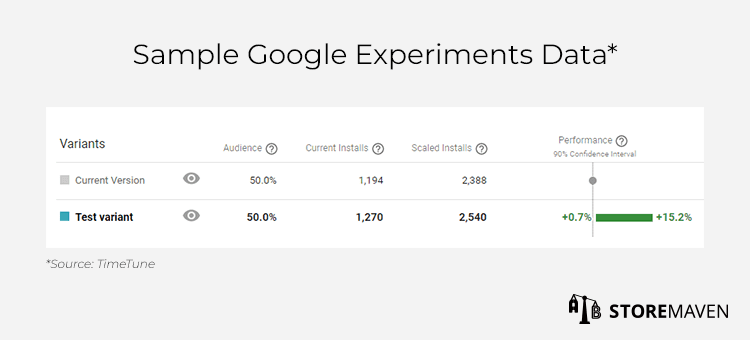

Store Listing Experiments evaluate results using a 90% confidence interval. The results are displayed on a rolling basis until Google concludes the test and declares a “winner.” In the Play Console, you can see the percentage of visitors who were shown each variation, current installs (the number of actual installs for each variation), scaled installs (the number of installs you would have received had only one variation been running), and performance (the range of performance at a 90% confidence interval).

Caveats: While we aren’t saying Google’s method is invalid, the lack of transparency about how Google’s algorithm determines winners makes the tests’ validity more questionable. There is also uncertainty about how and when Google Experiments shows the different variants. Are they shown at the same times of day and at the same frequency? Differences in either factor could significantly skew the results.

Additionally, on a sophisticated testing platform, you can compare how variations performed relative to each other. Google Experiments only compares each variation to the control. Comparing variations against each other generates even more insights. For example, even if you found that Variation B won against the Control, you may still uncover unique insights by comparing Variation C to Variation D to understand which of the two hypotheses was stronger.
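To make pairwise comparison concrete, here is a minimal Python sketch. For every pair of variations, not just each one against the Control, it computes a 90% Wald confidence interval for the difference in conversion rate (installs divided by Store Listing visitors). It assumes you have visitor counts per variation, which Google Experiments does not show. The variation names and counts are hypothetical.

```python
import math
from itertools import combinations
from statistics import NormalDist


def cvr_diff_ci(installs_a: int, visitors_a: int,
                installs_b: int, visitors_b: int,
                confidence: float = 0.90) -> tuple[float, float]:
    """Wald interval for CVR(b) - CVR(a) using the normal approximation."""
    p_a, p_b = installs_a / visitors_a, installs_b / visitors_b
    se = math.sqrt(p_a * (1 - p_a) / visitors_a + p_b * (1 - p_b) / visitors_b)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    diff = p_b - p_a
    return diff - z * se, diff + z * se


def pairwise_comparisons(results: dict[str, tuple[int, int]],
                         confidence: float = 0.90) -> dict[tuple[str, str], tuple[float, float]]:
    """Compare every pair of variations; results maps name -> (installs, visitors)."""
    return {
        (a, b): cvr_diff_ci(*results[a], *results[b], confidence)
        for a, b in combinations(results, 2)
    }


results = {  # hypothetical (installs, visitors) per variation
    "Control": (300, 10_000),
    "B": (360, 10_000),
    "C": (330, 10_000),
    "D": (390, 10_000),
}
for pair, (low, high) in pairwise_comparisons(results).items():
    print(pair, f"{low:+.4f} to {high:+.4f}")
```

Here the C-versus-D interval lies above zero even though both are separate hypotheses from the winning Variation B. Each extra pairwise comparison adds another chance of a false positive, so a real analysis would typically apply a multiple-comparison correction. The sketch omits this for brevity.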

The “scaled installs” metric is also problematic because it rests on a strong assumption: that a variation’s installs scale in direct proportion to its share of traffic. There are too many unknowns to know, or even estimate, how many installs a variant would receive with 100% of traffic, so this metric is less reliable for predicting a sustainable CVR uplift.

Lastly, the results don’t show the number of Store Listing views. If you see only installs, you lack a full picture of what’s really happening in the store and can’t calculate conversion rate metrics. This is one reason we use Bayesian statistics for more accurate ASO testing.
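The sketch below shows one common Bayesian way to compare two variations. It uses a Beta-Binomial model with uniform Beta(1, 1) priors on each conversion rate and a Monte Carlo estimate of the probability that variation B converts better than A. This is a generic textbook technique, not a description of any particular platform’s method. It assumes you know Store Listing visitors as well as installs, and the counts are hypothetical.

```python
import random


def prob_b_beats_a(installs_a: int, visitors_a: int,
                   installs_b: int, visitors_b: int,
                   draws: int = 20_000, seed: int = 0) -> float:
    """Monte Carlo estimate of P(CVR_B > CVR_A) with Beta(1, 1) priors.

    Each posterior is Beta(1 + installs, 1 + visitors - installs).
    """
    rng = random.Random(seed)  # seeded for reproducible estimates
    wins = 0
    for _ in range(draws):
        p_a = rng.betavariate(1 + installs_a, 1 + visitors_a - installs_a)
        p_b = rng.betavariate(1 + installs_b, 1 + visitors_b - installs_b)
        wins += p_b > p_a
    return wins / draws


# Hypothetical counts: 300 installs from 10,000 visitors vs. 360 from 10,000.
print(round(prob_b_beats_a(300, 10_000, 360, 10_000), 3))
```

The output is the probability that B has the higher conversion rate, given the data and the prior. Many teams find this easier to act on than a confidence interval. Note that it needs visitor counts, which Google Experiments does not report.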

Why You Should Use Google Experiments with a Testing Platform

Even though Google Experiments has its drawbacks as a standalone tool, that’s not to say developers should never use it. The most effective way to leverage Google Experiments is as a supplement to the tests you run on a testing platform. Beyond addressing the issues above, testing platforms are crucial for:

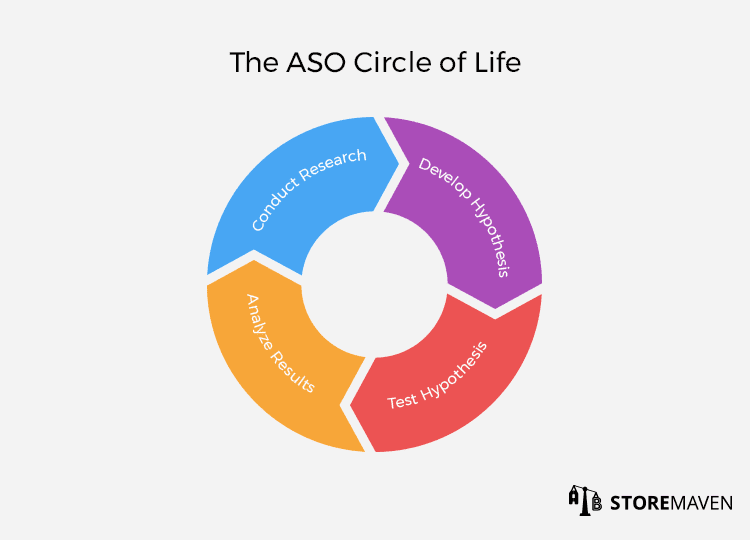

Long-Term App Store Optimization (ASO) Strategy Building

Leading mobile publishers continue to succeed in part because they’ve implemented long-term, sustainable optimization strategies. The insights and results they draw from each test drive the research and hypotheses for the next test, and so on, creating a continuous “ASO Circle of Life.” However, if you rely only on Google Experiments, you don’t have the learnings or data needed to lay the foundation for subsequent tests.

For example, let’s say testing on a platform revealed that your 4th Screenshot caused a significant spike in conversion. Based on this finding, your next test could analyze how CVR is affected when you move this messaging further up the Gallery. If you rely only on the install metrics that Google Experiments provides, you’re missing the engagement data that should guide your testing strategy.

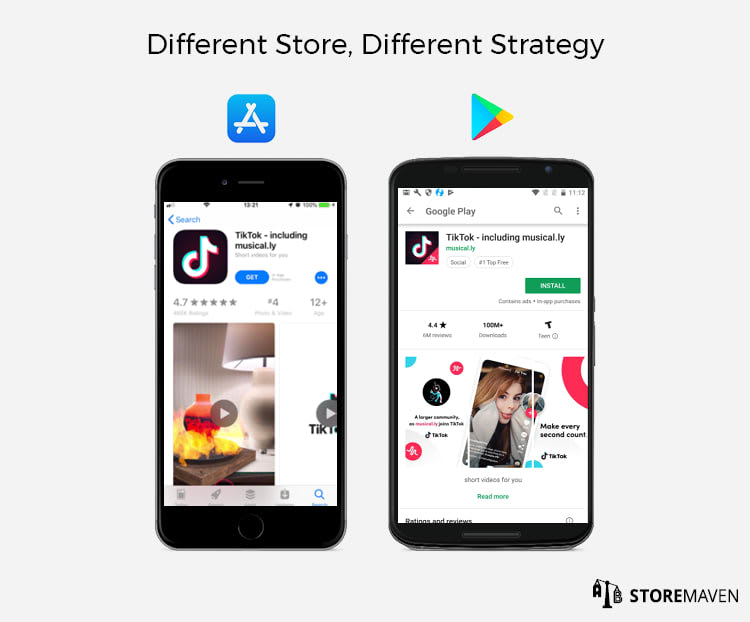

Developing Separate ASO Strategies for the Apple App Store and Google Play Store

It’s important to treat the Apple App Store and Google Play Store as two separate platforms. In other words, you shouldn’t reuse the creatives that win on Google Experiments for iOS. In fact, we’ve found that using Google Play creatives on the App Store can lead to a 20-30% decrease in installs.

This is because:

- The overall design of the stores is still not the same (e.g., no autoplay for Google Play videos, different image resolutions, etc.)

- Developers often drive different traffic to each store (e.g., different sources, campaigns, and ad banners)

- Different apps are popular on each platform, so competition varies

- Google Play’s user base is not the same as the App Store’s; user mindsets and preferences are fundamentally different

What Does This Mean For Your ASO Strategy?

As a standalone testing platform, Google Experiments is not a sufficient tool on which to base your entire ASO strategy.

The surface-level results you receive from each experiment give a general sense of whether certain creatives work better than others, and this is an important aspect of optimization. However, to get the most out of Google Experiments, use data and insights from a third-party testing platform to uncover why certain creatives work better and how to keep building on each test. This will enable you to create a more sustainable ASO strategy that gives you a competitive advantage in your category.

—

StoreMaven is the leading app store optimization (ASO) platform that helps global mobile publishers like Google, Zynga, and Uber test their Apple App Store and Google Play Store marketing assets and understand visitor behavior. If you’re interested in uncovering crucial app store visitor engagement data and optimizing your current app store creatives, schedule a demo with us.