In my last post, I called a bluff on A/B testing calculators and showed why many App Store Optimization (ASO) A/B testing methods and tools are statistically unsound and can produce grossly inaccurate results. I also explained why StoreMaven’s algorithm, StoreIQ™, has proven to be the most statistically sound and effective way to test app store creatives.

In this post, I will dive in a bit deeper and explain the statistical fundamentals that make StoreIQ™ more accurate for ASO testing.

Really, it all boils down to an old philosophical statistics debate…

Frequentists vs. Bayesians in App Store A/B Testing

Outside of the scientific community, few people know about the long-running feud between two schools of thought in statistics: the Frequentists and the Bayesians. Statisticians around the world continue to argue over which methodology is the right one to use in statistical analysis.

Is this merely a case of the People’s Front of Judea vs. the Judean People’s Front (Monty Python’s Life of Brian, 1979)?

Well, it all depends on the context and on how statistically accurate you need to be. And if we’re talking about your app store conversion rate (CVR), you likely want to be accurate.

Frequentists perform inference by first modeling the phenomenon they wish to analyze (e.g., the CVR distribution). They define the probability of an event as the frequency with which it would occur over an “infinite” number of samples (in practice, infinity is impossible in tests that rely on empirical observations, so a very large sample size has to suffice instead). They then carry out their analyses based on this assumed model and the observations (e.g., the number of installs and impressions). In short, they draw conclusions based solely on the data available from the current experiment. Standard A/B tests, t-tests, z-tests, ANOVA, and many other methods are based on this approach.

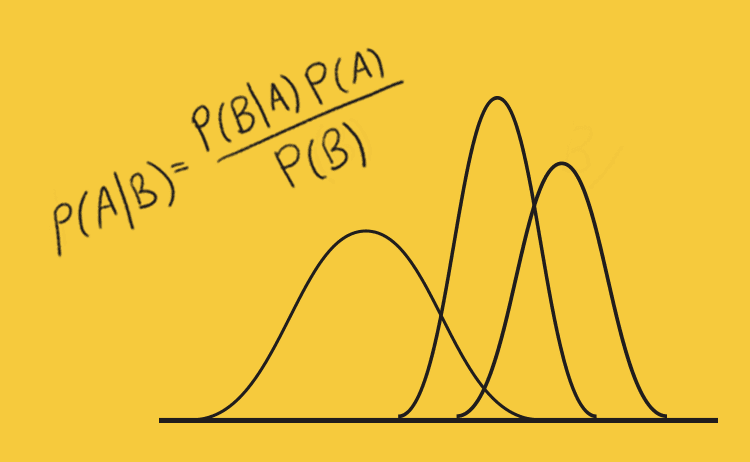
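To make the frequentist idea of probability concrete, here’s a small, hypothetical Python simulation (not part of any testing tool). It assumes an app page whose “true” CVR is 30% and draws simulated impressions to show the observed CVR settling toward that value only as the sample grows. The function name `empirical_cvr` and the 30% figure are assumptions made purely for illustration.

```python
import random


def empirical_cvr(true_cvr: float, impressions: int, seed: int = 42) -> float:
    """Simulate `impressions` page views, each converting with probability
    `true_cvr`, and return the observed CVR (installs / impressions).

    Hypothetical helper used only to illustrate long-run frequency."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    installs = sum(1 for _ in range(impressions) if rng.random() < true_cvr)
    return installs / impressions


# The frequentist reading of "CVR = 30%": the observed rate gets reliably
# close to 0.30 only as the number of impressions becomes very large.
for n in (100, 10_000, 1_000_000):
    print(n, round(empirical_cvr(0.30, n), 4))
```

With 100 impressions the observed CVR can easily be several percentage points off; with a million it is typically within a fraction of a point, which is why frequentist methods lean so heavily on large samples.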

The Bayesian approach is similar but not quite the same. It is named after the mathematician Thomas Bayes (c. 1701 – 1761), who formulated the famous Bayes’ theorem.

This theorem lets us calculate the probability of an event given that related events have already occurred. Its major contribution is that it allows us to calculate probabilities in a realistic setting where we take related events into consideration (i.e., when we are better informed). Simply put, the Bayesian approach brings into the statistical analysis the prior knowledge we have already obtained about the tested phenomenon.
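In its general form, Bayes’ theorem reads as follows. Applied to ASO, one way to read it (our notation, chosen for illustration) is to let $\theta$ stand for a variant’s unknown CVR and “data” for the installs and impressions observed so far; the updated (posterior) belief is then proportional to the likelihood of the data times the prior belief:

$$
P(A \mid B) = \frac{P(B \mid A)\,P(A)}{P(B)}
$$

$$
\underbrace{p(\theta \mid \text{data})}_{\text{posterior}} \;\propto\; \underbrace{p(\text{data} \mid \theta)}_{\text{likelihood}} \; \underbrace{p(\theta)}_{\text{prior}}
$$

The prior $p(\theta)$ is exactly where “prior related knowledge” enters the analysis.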

So, are these two approaches significantly different?

The short answer is yes, and this is precisely why we, at StoreMaven, use the Bayesian approach in our StoreIQ™ algorithm.

Why Bayesian Statistics Are More Accurate for ASO Testing

As I stated earlier, the Frequentist approach defines probability in terms of an infinite number of samples, which means that, at the very least, it requires a very large sample size for its approximations to hold. Sometimes we simply don’t have that much data. In addition, it leaves out prior related knowledge that could greatly help us understand the phenomenon through the available data.

Using the Bayesian approach, we can start the test with knowledge we have already gained and keep updating our estimates as the test progresses: each new batch of data turns the current (prior) distribution into an updated (posterior) one. Taking the most probable value of that posterior distribution as our estimate is known as Maximum A Posteriori (MAP) estimation. This lets us fine-tune our estimates and achieve more accurate results.
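StoreIQ™’s actual model isn’t described in this post, so here’s a minimal sketch of sequential Bayesian updating under a common textbook assumption: a Beta prior on the CVR combined with binomial install counts (the Beta-Binomial model). In that model, updating simply adds the new installs and non-installs to the Beta parameters, and the MAP estimate is the mode of the resulting Beta distribution. The class name `BetaPosterior`, the prior Beta(20, 80), and the daily counts are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class BetaPosterior:
    """Beta(alpha, beta) belief about a variant's CVR (hypothetical helper).

    With a Beta prior and binomial install counts, the posterior is again
    a Beta distribution, so an update just adds counts to the parameters."""
    alpha: float = 1.0  # prior pseudo-installs (alpha = beta = 1 is a flat prior)
    beta: float = 1.0   # prior pseudo-non-installs

    def update(self, installs: int, impressions: int) -> None:
        """Fold a new batch of observations into the posterior."""
        if not 0 <= installs <= impressions:
            raise ValueError("installs must be between 0 and impressions")
        self.alpha += installs
        self.beta += impressions - installs

    def mean(self) -> float:
        return self.alpha / (self.alpha + self.beta)

    def map_estimate(self) -> float:
        """Mode of the Beta posterior, i.e. the MAP estimate of the CVR.
        Only defined this way when alpha > 1 and beta > 1."""
        return (self.alpha - 1) / (self.alpha + self.beta - 2)


# Hypothetical prior knowledge worth ~100 impressions at ~20% CVR,
# followed by three hypothetical daily batches of (installs, impressions).
posterior = BetaPosterior(alpha=20, beta=80)
for installs, impressions in [(30, 100), (45, 150), (60, 200)]:
    posterior.update(installs, impressions)
    print(round(posterior.map_estimate(), 4))
```

Each day the MAP estimate moves away from the 20% prior toward the roughly 30% CVR seen in the data, and the prior’s influence fades as impressions accumulate. With a flat Beta(1, 1) prior, the MAP estimate reduces to the plain observed CVR.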

In ASO, the commonly used A/B testing method is rather rigid. It assumes that the distribution model stays unchanged throughout the test, regardless of whether the CVR rankings of the tested variations are constant or volatile.

Let’s compare the distribution models of both methods…

In A/B testing, you run a proportion difference test on the CVRs of two variants. First, you must make sure that you have collected enough samples before performing your calculations. The calculations are then performed once, so they rely on a single model to describe the data. This is problematic because a single model does not represent data that may change over time or be affected by external factors.
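For reference, here’s a minimal sketch of one common form of that proportion difference test: the pooled two-proportion z-test, computed once on the final totals. The function name and the install and impression counts are hypothetical; only Python’s standard library is used.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(installs_a: int, impressions_a: int,
                          installs_b: int, impressions_b: int) -> tuple[float, float]:
    """Pooled two-proportion z-test on the CVRs of variants A and B.

    Returns (z, two_sided_p_value). Relies on the normal approximation,
    which is only reasonable when each variant has many impressions."""
    p_a = installs_a / impressions_a
    p_b = installs_b / impressions_b
    # Pooled CVR under the null hypothesis that both variants convert equally.
    p_pool = (installs_a + installs_b) / (impressions_a + impressions_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / impressions_a + 1 / impressions_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical totals, evaluated once at the end of the test.
z, p = two_proportion_z_test(300, 1000, 345, 1000)
print(f"z = {z:.3f}, p = {p:.4f}")
```

Note that every impression counts the same in these totals, whenever it happened during the test, and the result depends on a single normal model of the difference.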

With the MAP method, we continuously take in new data and recalculate the CVR distribution model using both current and past data.

Here’s a rough illustration of the distribution shapes:

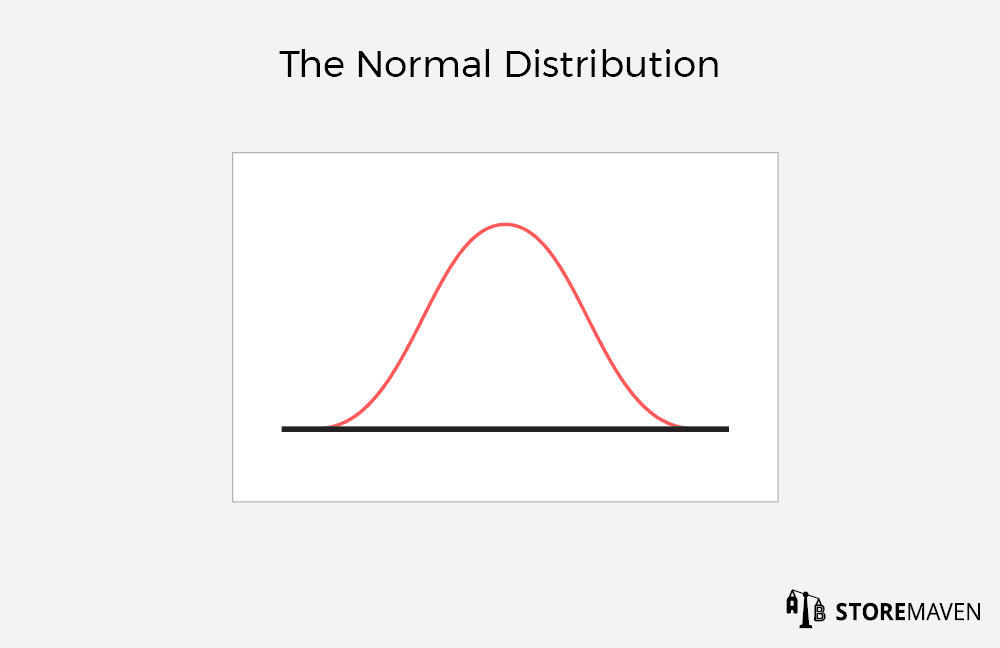

A/B testing uses a normal distribution model, as portrayed above.

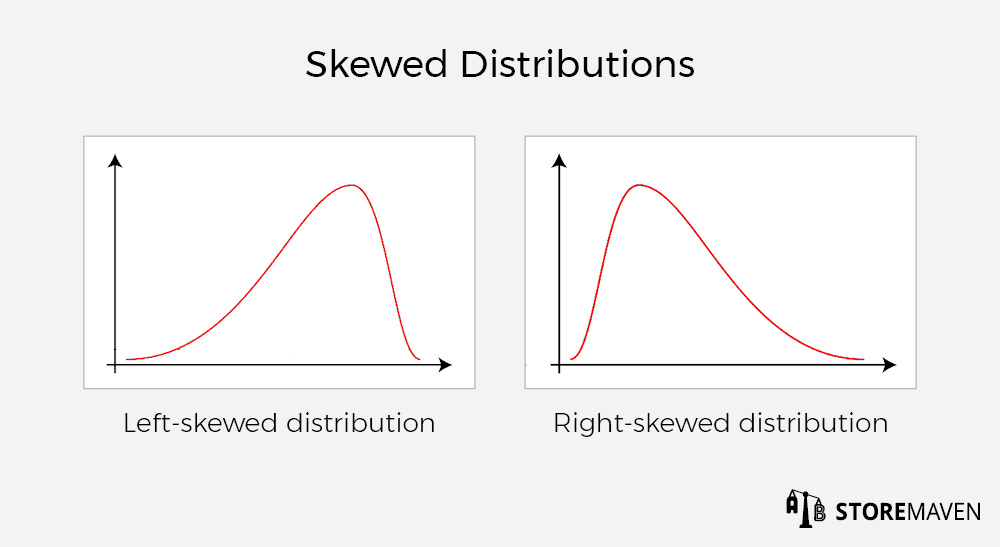

With MAP calculations, the model distribution can take whatever shape best fits the observed data (current and past).

It can look like the normal distribution or like a skewed distribution, as portrayed above.

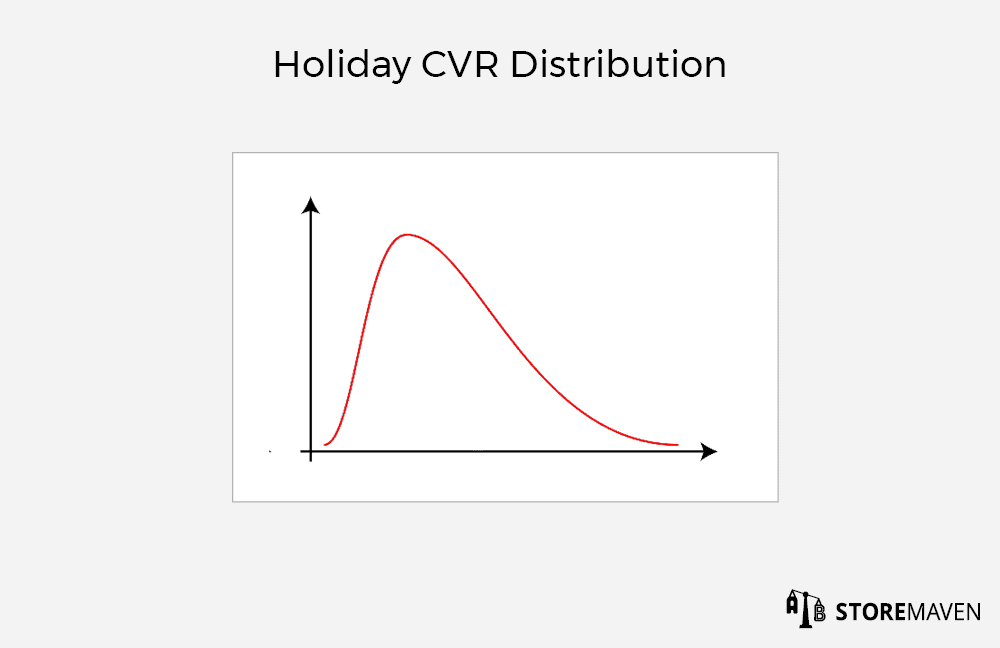

MAP calculations also let us adjust our estimate of the tested parameter as conditions change. For example, let’s say that a popular app starts testing on a holiday. Because it’s a holiday, the app may see many impressions but few installs, so the observed CVRs are correspondingly low.

Using MAP, the model for this distribution would be right-tailed, meaning that it assigns a much higher probability to low CVR values than to high ones. As the test continues past the holiday, we see that the CVR is actually much higher, as you would expect for a very popular app.

Notice how the distribution is then adjusted to become left-tailed, as portrayed above.
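Here’s a hypothetical sketch of that holiday scenario, again assuming a Beta posterior on the CVR (a textbook choice, not necessarily what StoreIQ™ uses) and made-up traffic numbers. It tracks the posterior mean and skewness: positive skewness means right-tailed, negative means left-tailed. For a Beta distribution, the sign of the skew follows whether the estimated CVR is below or above 50%, and the skew shrinks as impressions accumulate, so with real traffic volumes the tails are far less pronounced than in a schematic drawing.

```python
from math import sqrt


def beta_skewness(alpha: float, beta: float) -> float:
    """Skewness of a Beta(alpha, beta) distribution.

    Positive = right-tailed (mass at low CVRs), negative = left-tailed."""
    return (2 * (beta - alpha) * sqrt(alpha + beta + 1)
            / ((alpha + beta + 2) * sqrt(alpha * beta)))


alpha, beta = 1.0, 1.0  # flat prior on the CVR (an assumption)

# Hypothetical holiday traffic: many impressions, few installs.
holiday_installs, holiday_impressions = 100, 1000
alpha += holiday_installs
beta += holiday_impressions - holiday_installs
print("after holiday:", round(alpha / (alpha + beta), 3),
      round(beta_skewness(alpha, beta), 4))

# Hypothetical post-holiday traffic for the same popular app.
later_installs, later_impressions = 2800, 4000
alpha += later_installs
beta += later_impressions - later_installs
print("after the holiday ended:", round(alpha / (alpha + beta), 3),
      round(beta_skewness(alpha, beta), 4))
```

The first line shows a low estimated CVR with a positive (right-tailed) skew; once the post-holiday data arrives, the estimate rises above 50% and the skew turns negative (left-tailed).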

If we were to run an A/B test instead, no adjustment would be made at all: the final model would be based on the normal distribution, and all observed data would be given equal weight when calculating the test results.

It’s important to emphasize that this is not what makes the A/B test invalid, since slow days exist in reality and can’t be disregarded entirely. It only means that enough samples must be collected to ensure that such occasions are represented as they actually occur, and that could require a very large sample size.
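To give a feel for how large “very large” can get, here’s a minimal sketch of the standard normal-approximation sample-size formula for a two-sided two-proportion z-test. The baseline and target CVRs, the 5% significance level, and 80% power are hypothetical inputs, and the function name is ours.

```python
from math import ceil, sqrt
from statistics import NormalDist


def impressions_per_variant(baseline_cvr: float, target_cvr: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate impressions needed per variant for a two-sided
    two-proportion z-test to detect a change from baseline_cvr to target_cvr."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    p_bar = (baseline_cvr + target_cvr) / 2
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(baseline_cvr * (1 - baseline_cvr)
                                 + target_cvr * (1 - target_cvr))) ** 2
    return ceil(numerator / (target_cvr - baseline_cvr) ** 2)


# Hypothetical: baseline CVR of 30%, detecting lifts to 33% and to 31.5%.
print(impressions_per_variant(0.30, 0.33))
print(impressions_per_variant(0.30, 0.315))
```

Because the required sample grows with the inverse square of the effect size, halving the lift you want to detect roughly quadruples the impressions each variant needs.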

What makes StoreIQ™ smarter than your average testing algorithm is that it accounts for how time affects ASO tests. It does so by adjusting the statistical models used to analyze the data with Bayesian calculations. However, time is not the only concern in ASO, and it is certainly not the only factor StoreIQ™ takes into account. StoreIQ™ also leverages data we have gathered across hundreds of apps and years of testing to produce better estimates and even more accurate results.
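This post doesn’t say how StoreIQ™ turns cross-app data into prior knowledge. As a purely illustrative sketch, one common textbook approach is to fit a Beta prior to CVRs seen in earlier, comparable tests using the method of moments, matching the prior’s mean and variance to the historical ones. The function name and the historical CVRs below are hypothetical.

```python
from statistics import mean, variance


def beta_prior_from_history(historical_cvrs: list[float]) -> tuple[float, float]:
    """Fit Beta(alpha, beta) to past CVRs by the method of moments.

    Needs at least two values, and the sample variance must be smaller than
    m * (1 - m) (where m is the mean) for both parameters to be positive."""
    m = mean(historical_cvrs)
    v = variance(historical_cvrs)  # sample variance
    if not 0 < v < m * (1 - m):
        raise ValueError("historical CVRs are identical or too spread out for a Beta fit")
    common = m * (1 - m) / v - 1
    return m * common, (1 - m) * common


# Hypothetical CVRs observed in earlier tests of comparable apps.
alpha0, beta0 = beta_prior_from_history([0.28, 0.31, 0.25, 0.33, 0.30, 0.27])
print(round(alpha0, 1), round(beta0, 1))
```

The fitted (alpha0, beta0) could then serve as the starting pseudo-counts of a Beta prior for a new test, so the test begins from what similar apps have already shown rather than from scratch.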

I would be happy to answer any questions you may have, so feel free to reach out at [email protected].