Intro: What is an app icon?

An app icon is a visual representation of your brand or product. It is the first element users see when they visit the Apple App Store or the Google Play Store.

An app icon is the only creative element that appears throughout the user journey in the app stores. For organic users who find an app or game through top charts, search, or featured placements, it appears in every listing. For paid users who land directly on an app store page from an ad, the app icon is one of the main visual assets they see at first impression.

Why are app icons important in ASO?

Why are app icons important in app store optimization? The app icon is your app’s first impression. A glance lasting a mere second can tell users a lot: What is the app used for? What is its purpose? Do I want anything to do with this app?

In the app stores, there’s a lot you can do to shape that first impression. This article covers what is arguably one of the most crucial parts of your app store optimization (ASO): the app icon. It explores how significant the icon is to your ASO strategy, the impact it has on your conversion rates, and the components you need to benefit from a great icon.

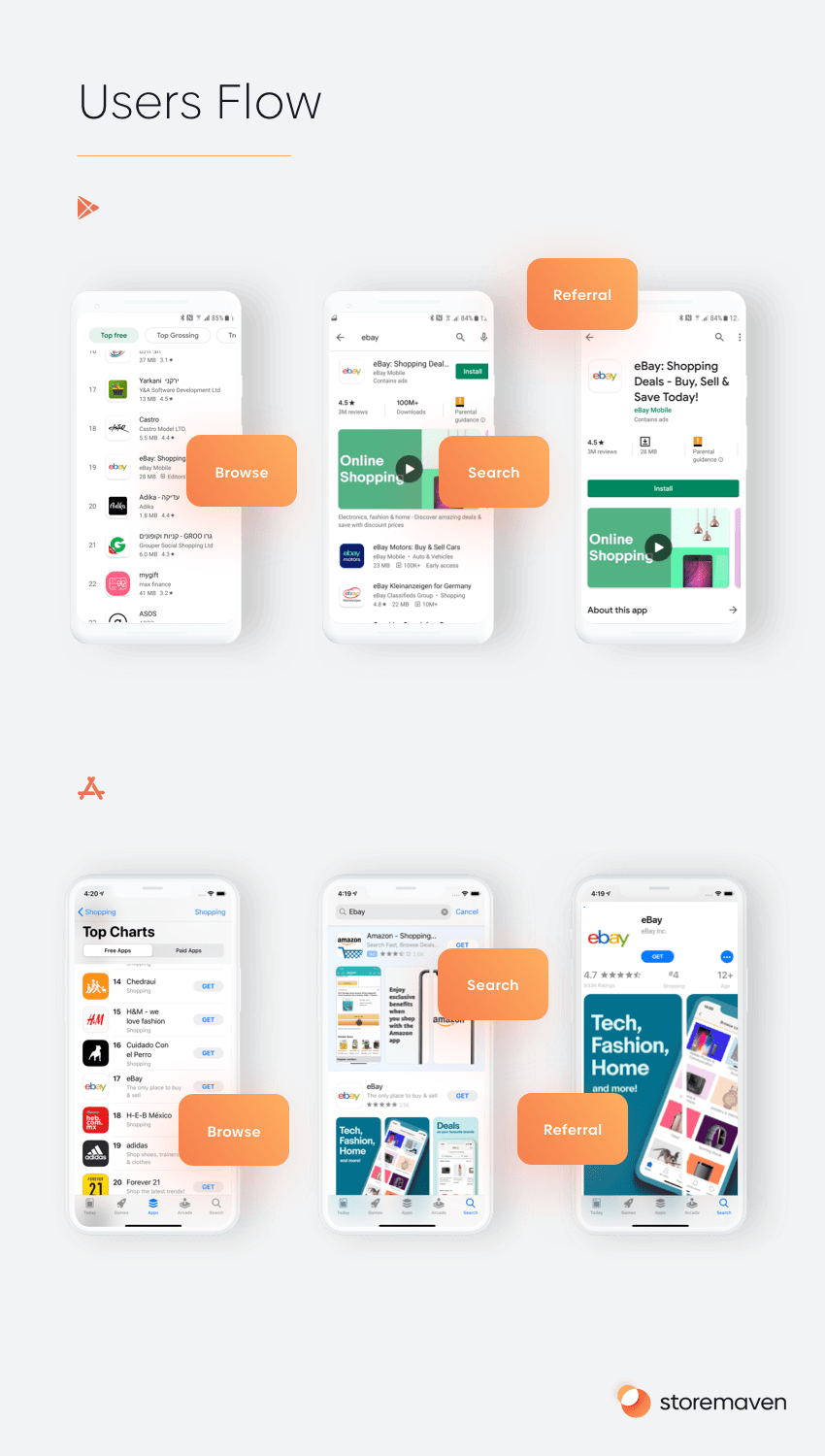

The role of app icons in user discovery

Consider the icon’s role: it’s the only element that appears throughout the user’s journey. It doesn’t matter if that journey takes the user from a featuring placement straight to your app, from the search result page, or even from an ad on Facebook.

Moreover, the icon is the only visual element that stays with users after they install the app, because it sits on their home screen; thus, it also affects engagement and app opens.

You will find the app icon across all the different store pages. Whatever journey users take, they will meet the icon along the way: on the featured page, the top charts, the category page, the app of the day, top games, the ‘for you’ page, editors’ choice, the daily list, and when exploring, browsing, searching, or being referred. And since 70% of users are decisive (they decide whether or not to install an app within three seconds), your icon may well be the only thing they look at during this brief encounter with your app. Therefore, optimizing your icon is imperative.

Optimization: The App Icon’s impact on conversion rates

If you’re interested in learning more about the impact of App Store creatives on user behavior, we’ve got you covered: we researched 500M users to uncover how they behave on the App Store and which assets are the most important for driving installs.

The app icon has a profound impact on visitors throughout all areas of the funnel; plus, it has a lasting impact on re-engagement, since it can remind users to open the app when they see it on their smartphone home screens. In the Apple App Store, the icon is the most dominant marketing asset on browse pages (i.e., the Today, Games, and Apps pages). It is also a big factor in search results and on the product page itself. For the App Store, the median potential conversion rate (CVR) lift from icon optimization is 18%.

In the Play Store, the app icon plays an even greater role because, in addition to browse pages, the icon is the only creative marketing asset shown on the search results page. The median potential CVR lift for icon optimization is 11%.

App Icon design: Choosing the right style and message for your icon

Icons appear in different places in the funnel. They serve different purposes depending on the step in the funnel and the app.

App icons can also help create an emotional connection with the app and can support and reflect general marketing campaigns. If the app is based on intellectual property (IP), the icon helps prove that this is the “official” app and makes the message more powerful by using creative assets that users easily connect and identify with. The icon can also demonstrate a value proposition. For example, in the social casino category, many developers feature a joining bonus in the icon, although this might also attract low-quality traffic that’s only interested in “free stuff.”

What types of app icons are there and what types are being used?

There are multiple creative directions to pursue when designing an app icon. Start by developing the right hypotheses about the desired design style and messaging, and then test them to see how they perform. If one of your hypotheses suggests that visitors prefer to see a specific feature of the app rather than the emotional experience of using it, then the design of each icon variation should bring that statement to life.

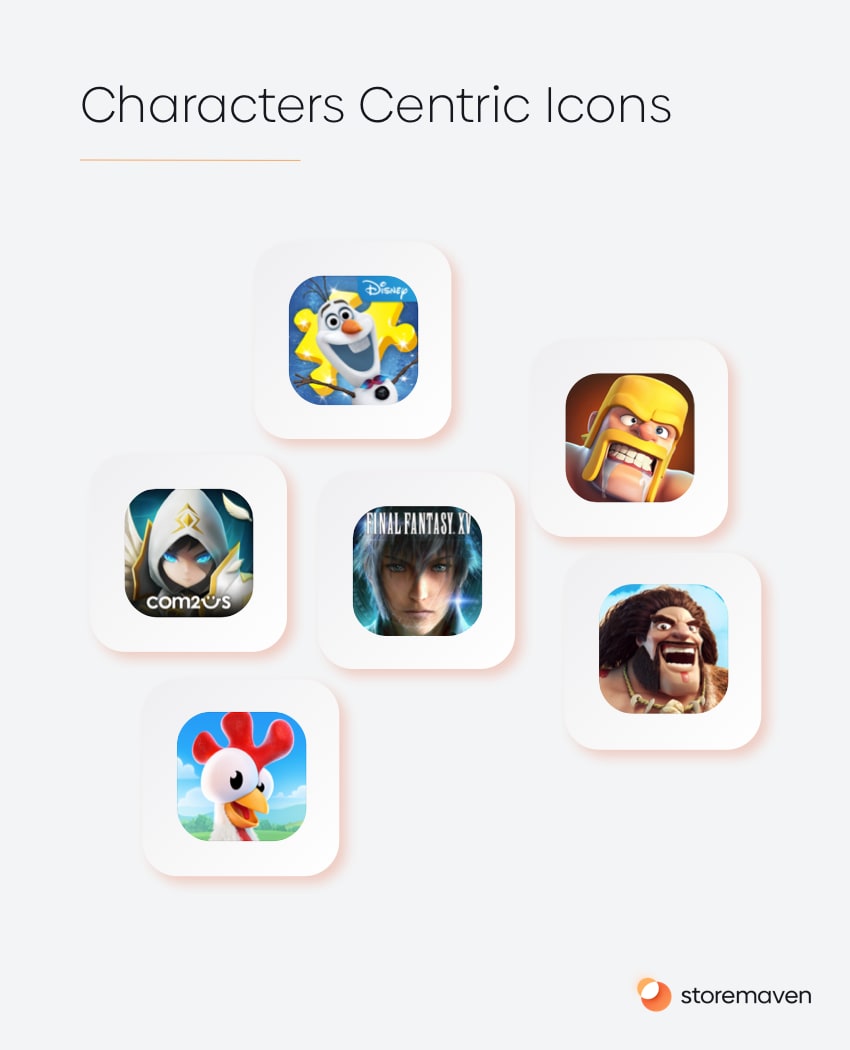

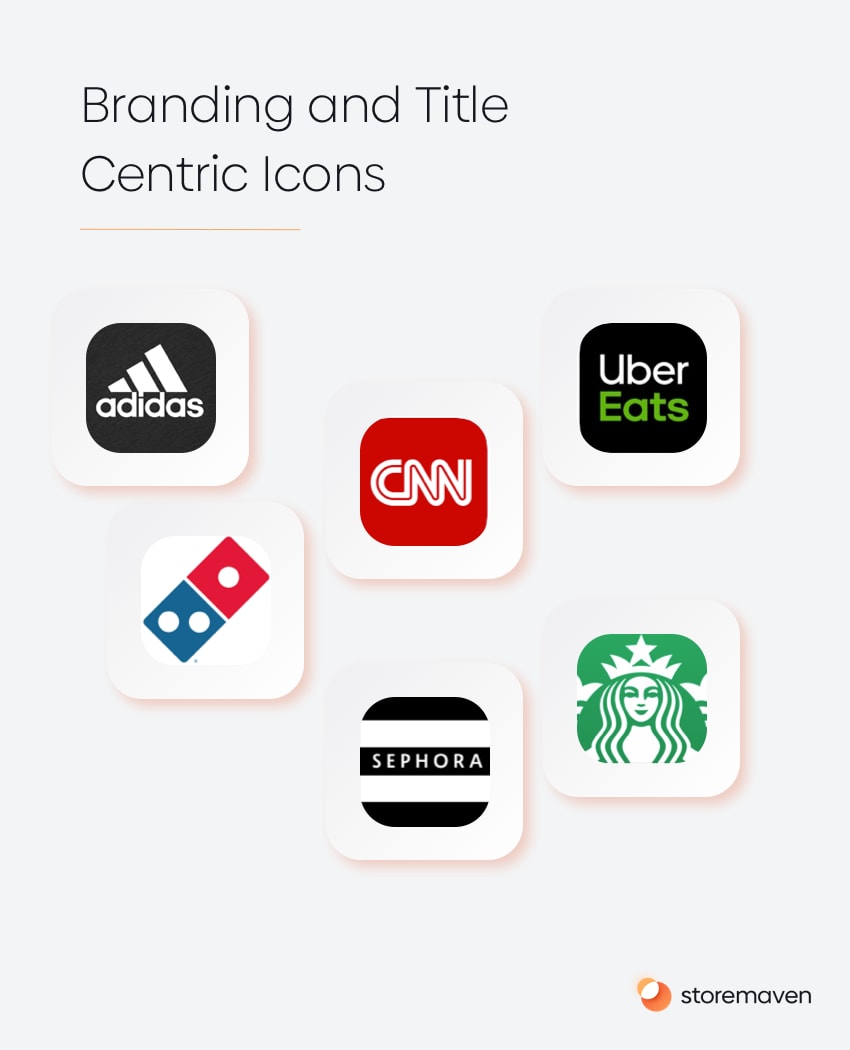

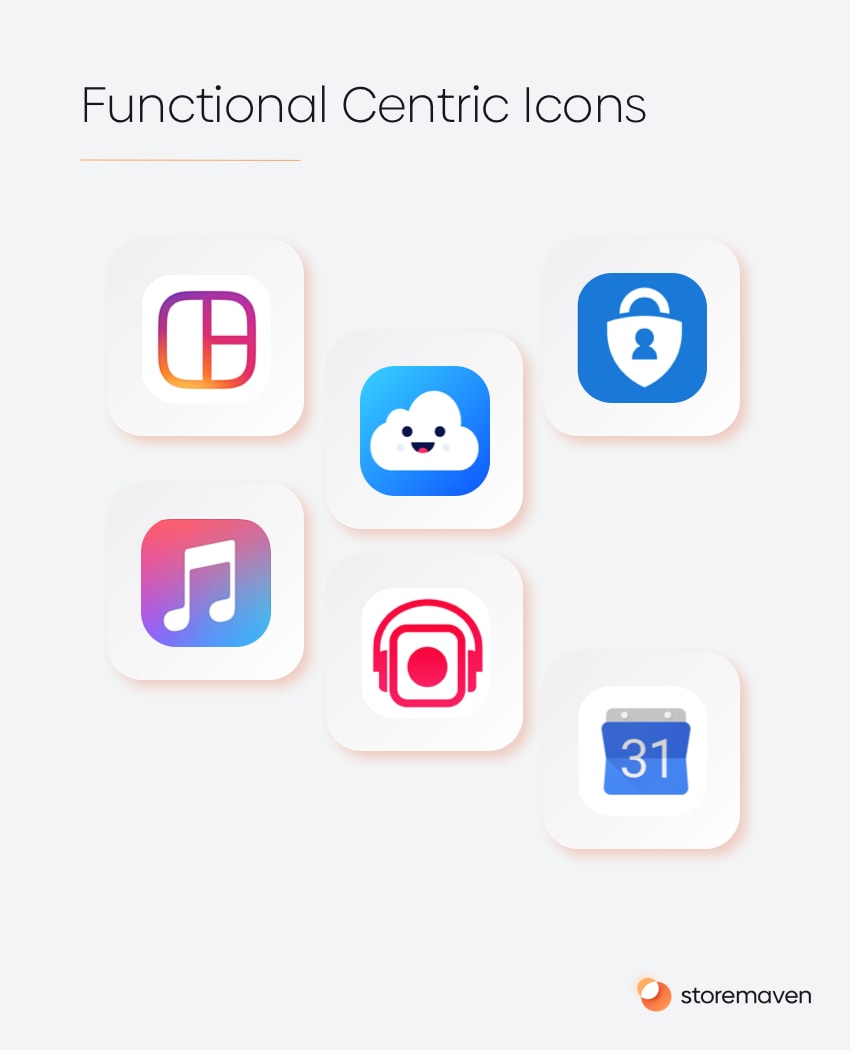

Look at these examples of different design styles, along with ideas for further icon tests within each style:

Character centric: With this style, users immediately connect with a recognizable character. You can choose between a single character or multiple ones, with or without text, or use a branded character.

Branding centric & title centric: The same concept applies here, since users will recognize the brand within a second and engage with it based on brand awareness.

Functional centric: When looking for an app, users are searching for a solution. Here, the image conveys the app’s core functionality and connects it with what users are looking for.

Universal principles for designing app icons

The five core app icon design principles below come from Michael Flarup, a well-known keynote speaker on icon design.

5 app icon design principles:

1. Scalability – Remember that the icon is going to be shown in several places throughout the platform and in different sizes. Don’t overcomplicate your icon or cram too much into it. A big part of the conceptual stages of creating an icon should be considering the design scale. Make sure you try out your icon on the device and in multiple contexts and sizes and remember that simplicity is the way to go—focus on a unique shape or element that retains its qualities when scaled.

2. Recognizability – Flarup compares the icon to a song: it needs to be identifiable amid all the noise of the store or your home screen. You want your icon to stand out and be easy to identify. Try to figure out what makes your app unique, but don’t overcomplicate the icon. Flarup recommends removing details from the icon until the concept starts to deteriorate, checking along the way whether this improves recognizability. Also, try deconstructing your favorite icons to see what methods they use and what catches your eye.

3. Consistency – Good icon design is an extension of what the app is all about. When the icon and the app support each other, the encounter is more memorable and sticks more easily in the user’s mind. For example, keep the color palette of your interface and icon in line, such as a green interface reinforced by a green icon.

4. Uniqueness – Not a lot to elaborate upon here—look at what others are doing in your field, and then try to go in a different direction. Play with colors and compositions, and bear in mind that the world doesn’t need another checkmark icon.

5. Don’t use words, but… Well, here, our data shows otherwise. Yes, automatically reaching for the written word isn’t putting your full design expertise to good use. But there are occasions when it is OK to use words on your icon. Users don’t like to read (this much is true), and usually you should aim to find a better way to visualize your app than mentioning its name one more time; yet there are cases when your message is so powerful or your brand so strong that you cannot avoid it.

App icon design – dos and don’ts

Here are some extra tips from Storemaven’s app store optimization experts, the dos and don’ts to follow before you start designing your app icon:

Dos

1. Use an app icon that stands out but matches the unique voice of the app and looks cohesive with the rest of the page.

2. It’s important to make sure the icon looks good in different places in the funnel where it is visible: top charts, search results, the product page itself, and even on Facebook ads.

3. Make sure your app icon looks good in dark mode; we’ve seen many mistakes here.

4. A safe bet is to use icons that are easily recognizable by users. There are a few icons that enjoy mostly universal recognition from users, such as icons of houses, magnifying glasses, and envelopes. For example, the Gmail icon uses an envelope, which is universally associated with mail.

5. Given its crucial role within the user journey, there are multiple stakeholders that care about the icon, be it the brand team, the ASO team, the user acquisition (UA) team, and even product marketing teams that strive to re-engage lapsed users. When experimenting with a new icon or testing and improving a current one, make sure your goals are aligned with those of other teams.

6. Feel free to use an app icon design tool – there are plenty available – but this should accompany proper testing and understanding your user base. App icon design services can be helpful for smaller companies with smaller budgets.

Don’ts

1. Don’t use abstract icons—they rarely work well. Users can’t rely on previous experience to figure out the meaning behind the icon, even if that meaning makes perfect sense to you. The Game Center icon is a great example; an interesting, colorful icon but people usually wonder what it means.

2. Don’t include nonessential words. Repeating the name of the app or telling people what to do with it, such as “watch” or “play,” is useless. See design principle 5 above on the use of words: use them only when they’re essential or part of a logo.

3. Don’t include photographic details because they can be very hard to see in small sizes.

4. Don’t use 3D perspectives. Using 3D perspectives and drop shadows can make icons hard to recognize.

5. Don’t make icons merge with the background.

Why is it important to test App icons?

In ASO, icon testing is very important. As mentioned before, your app icon has a profound impact on visitors throughout all areas of the funnel: it has a prominent place in UA ads (most likely on Facebook), the Search Results Page, top charts, and niche featured placements on the Apple App Store and Google Play Store, and it is eventually displayed on your full Product Page. Plus, it’s the only app store element that has a lasting impact on re-engagement, since it reminds users to open the app when they see it on their smartphone home screens.

Given its importance and effect on conversion and retention, you want to ensure you’re displaying an Icon that gives your app or game the best chance to succeed and drive conversion. Testing is one of the most effective, data-driven ways of doing this. In fact, through our work with leading mobile publishers, we’ve found that an optimized Icon has the potential to boost conversion rates (CVR) by up to 30%.

Since App Icons appear in a variety of areas, it’s not possible to test them cohesively throughout the funnel. However, this downside doesn’t eliminate the benefits of testing. There are still ways to measure each step of the funnel and improve your likelihood of long-term success.

App icon testing framework: Optimizing app store conversion rates

It’s hard to overstate how important it is to master the testing process before moving on to making actual decisions. Understanding why B outperformed A in a specific test lets you get the most value out of every test. Knowing where you stand compared to others in the industry and using best practices as a guideline will also stand you in good stead.

3 Questions for App Icon Testing

Here are 3 examples of important insights you would want to gain from testing your app icon:

1. What’s the impact of the icon on decisive users? Remember: decisive users are those who decide to install or leave without ever engaging with the page. The average time a decisive user spends on your page is between three and six seconds.

2. What’s the power of the icon in driving explorative behavior?

3. How does the icon fit the overall narrative you are trying to convey in the rest of the store? Does it increase conversion rates of explorative users or hurt them?
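To make the first and third questions concrete, one option is to split product-page visits by behavior and compute CVR for each group. Below is a minimal sketch, assuming a hypothetical per-visit record with `engaged` and `installed` flags; these names are illustrative and do not come from any store or testing-platform API. Following the definition above, a decisive visit is one that ends in an install or exit without any engagement with the page.

```python
from dataclasses import dataclass

@dataclass
class Visit:
    # Hypothetical per-visit record; field names are illustrative, not a store API
    engaged: bool    # scrolled, opened screenshots, played the video, etc.
    installed: bool  # the visit ended in an install

def cvr(visits):
    """Installs divided by visits; 0.0 for an empty group."""
    if not visits:
        return 0.0
    return sum(v.installed for v in visits) / len(visits)

def cvr_by_behavior(visits):
    """Split visits into decisive (never engaged) and explorative (engaged) groups."""
    decisive = [v for v in visits if not v.engaged]
    explorative = [v for v in visits if v.engaged]
    return {"decisive": cvr(decisive), "explorative": cvr(explorative)}

# Toy data for one icon variant
variant_b = [Visit(False, True), Visit(False, False), Visit(True, True),
             Visit(True, False), Visit(True, True)]
print(cvr_by_behavior(variant_b))
```

Running the same function on the control and on each variant would show whether a new icon moves decisive visitors, explorative visitors, or both.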

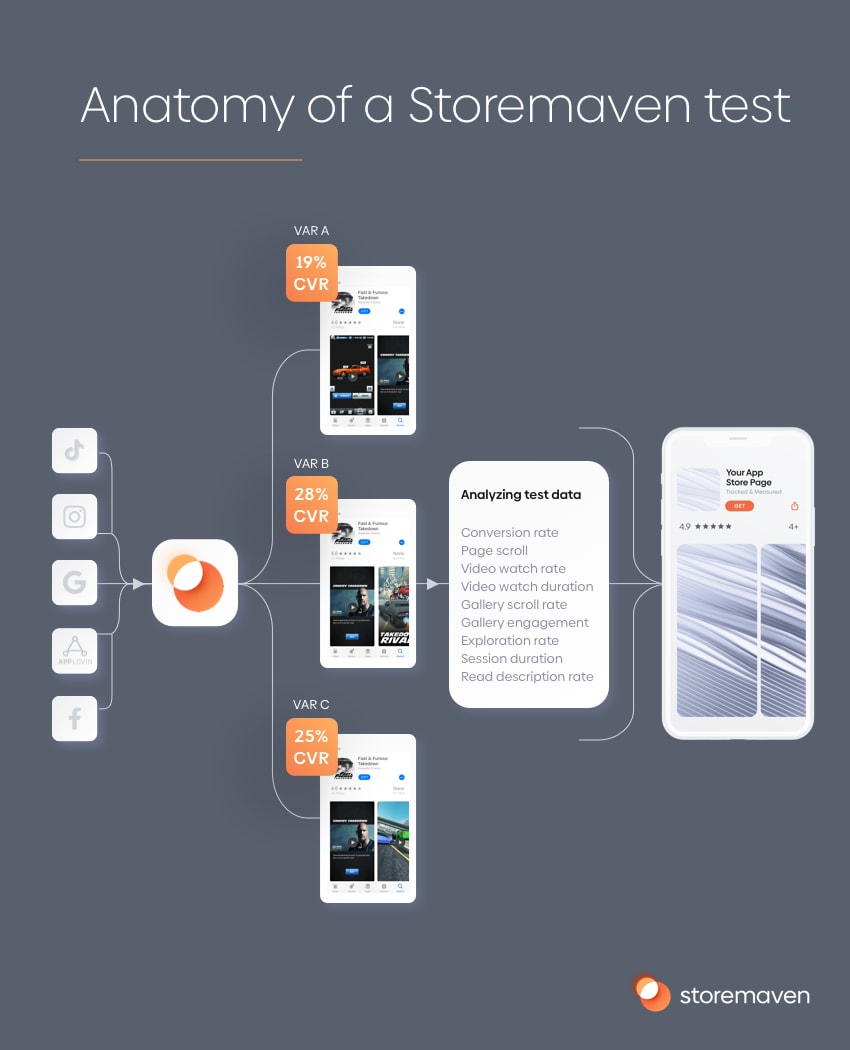

In order to answer these questions, you need to run a test on a platform that provides you with granular data specifically meant to answer them.

The ingredients of a great app icon test:

- Hypothesis: Choose a clear, strong hypothesis you can act upon. Changing an icon background from red to blue isn’t a strong hypothesis. Say your users like the blue icon better; now what? Test a yellow one? How about teal (who doesn’t like teal)? What about the hundreds of other colors? How does this test help you better understand your users?

- Design: Based on your hypothesis, create the design brief. Think about how the hypothesis is reflected in your creative assets, and start designing different variations of your icon.

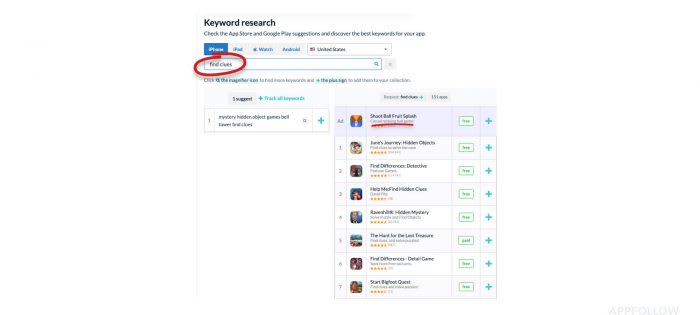

- Traffic Strategy: A test is only as successful as the traffic you direct into it. Knowing your audience and understanding exactly whom to target (based on the hypotheses you came up with) is crucial for a test’s success. StoreMaven can help here, as can the right insights to better define and segment your audience.

- Run a test: Set up ASO tests for the App Store and Google Play pages using a testing platform such as StoreMaven, by creating replicated versions of these pages and sending live traffic to them through banners on Facebook, Instagram, AdWords, or other digital channels.

- Analyze results: When your traffic strategy is in place and the test has been run, it’s time to carefully analyze the results.

- Start over: It doesn’t end there. Now you are equipped to come up with new hypotheses based on the results and insights from your test and run more tests to help improve your CVR. We constantly strive to improve, don’t we?
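For the "Analyze results" step, one common way to check whether a difference in CVR between the control icon and a variant is more than noise is a two-proportion z-test. The sketch below is a generic frequentist test under stated assumptions (independent visits, a sample size fixed in advance, no repeated peeking); it is not how Google Play experiments or StoreMaven compute their results, and the counts are made up.

```python
import math

def compare_variants(installs_a, visits_a, installs_b, visits_b):
    """Two-proportion z-test of variant B's CVR against control A.

    Returns (relative_lift, z_score, two_sided_p_value).
    Assumes both groups have visits and control A has at least one install.
    """
    p_a = installs_a / visits_a
    p_b = installs_b / visits_b
    # Pooled CVR under the null hypothesis that both icons convert equally
    pooled = (installs_a + installs_b) / (visits_a + visits_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / visits_a + 1 / visits_b))
    if se == 0:
        raise ValueError("pooled CVR is 0 or 1; the test is undefined")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    lift = (p_b - p_a) / p_a
    return lift, z, p_value

# Hypothetical counts: control icon 300/1000 installs, new icon 345/1000
lift, z, p = compare_variants(300, 1000, 345, 1000)
print(f"lift={lift:.1%}  z={z:.2f}  p={p:.3f}")
```

A low p-value only says the variants likely differ; understanding *why* the winner won still depends on the hypothesis behind the test.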

Two steps to maximize your app icon test

Upon analyzing successful Icon tests, we’ve found two specific factors common to all of them.

1) Develop strong hypotheses

It’s imperative that your hypotheses are precise and framed in a way that will advance your understanding of your app store visitors. It’s not enough just to change the background color or test different characters against each other.

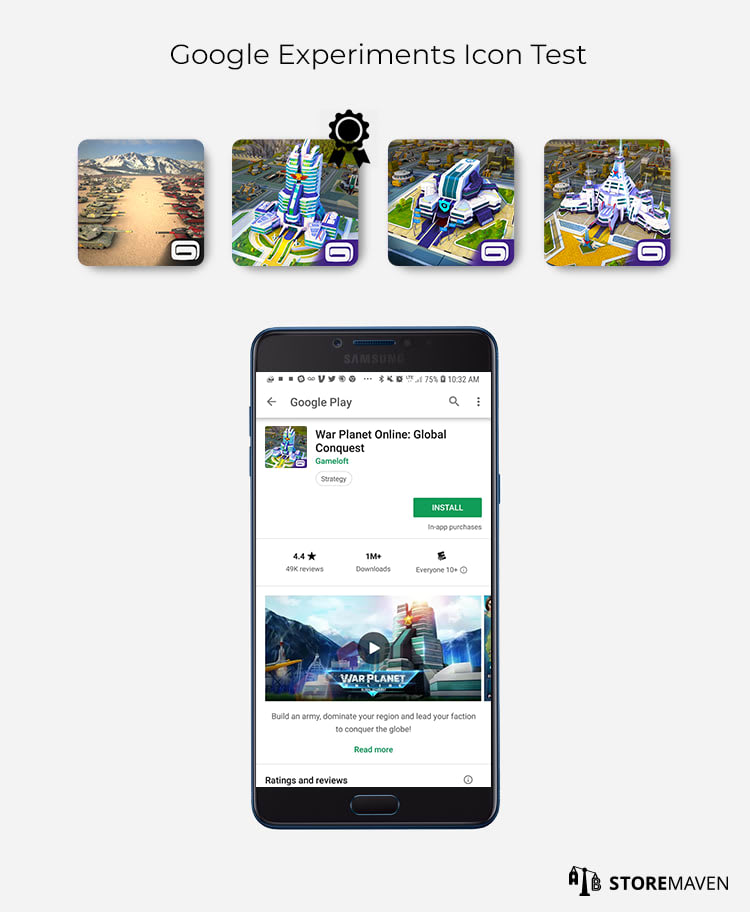

In the Google Experiments test above, Gameloft creatively tested distinct combinations of character placement and gameplay elements. Each variation is different enough in its messaging and design to isolate why a specific Icon won and to provide a foundation for the next test. In this case, the winning Icon shows that visitors don’t respond well to a character in the Icon, implying that gameplay mechanics are the main appeal over environmental and storytelling aspects.

2) Know which metrics matter

Once you’ve implemented strong hypotheses, you’ll need to know how to effectively measure the test results. As mentioned previously, Icon testing is never fully clean, since you can’t change and test your Icon at every step of the funnel. Still, the insights you gain are significant enough to create actionable next steps.

In addition to analyzing your App Store Connect (formerly iTunes Connect) data and the Google Play Console (despite its limitations), you should be monitoring:

App store engagement data

One of the most important metrics to use when measuring the impact of your ASO efforts is app store engagement data. This information is key to understanding your different audiences and identifying exactly which messages and creatives drive their actions.

Plus, it’s important to be aware that your app icon functions in parallel with other app store assets; it shouldn’t be looked at alone. For example, in cases where the Icon matches exactly what’s included in the Video thumbnail or Screenshots, the repetition could potentially harm CVR and lead to dropped visitors. In other cases, your Icon may clash with your Gallery and discourage visitors from exploring the rest of your product page.

Monitoring app store engagement uncovers the nuances of visitor behavior and reveals how different creatives impact their decisions. The insights you find here enable you to create a more holistic ASO strategy that takes into account the combined effect that app store assets have on engagement and conversion.

Impact on different traffic sources

Once a test is complete, you should be checking:

- Overall CVR

- Browse CVR (conversion of users who discover your app through top charts, featured app pages, or navigation tabs)

- Search CVR (conversion of users who discover your page by directly searching for your brand, or by searching relevant keywords and seeing your app in the Search Results Page)

- Referral CVR (conversion of users who arrive from paid campaigns through sources such as Facebook, Google, or network traffic)

- Click-through rate (CTR) of search and organic traffic

- Impression to install (ITI) of paid referral traffic

These metrics also provide insight into possible correlations between traffic sources. For example, if your Icon worked well with paid traffic and had a positive CTR and CVR impact on search, you know for the next test that search traffic behaves similarly to UA traffic when it comes to Icon preferences. If you understand how the funnel can be impacted, the next test is less of a gamble, so you have less reason to worry about damaging search CVR in subsequent tests.
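The per-source comparison above can be computed directly from visits and installs. Below is a minimal sketch with made-up counts (not real test data) for the browse, search, and referral sources; CTR and ITI are left out because they also need impression data. In this illustration the variant lifts overall CVR while slightly lowering search CVR, which the overall number alone would hide.

```python
# Hypothetical (visits, installs) per traffic source for a control and a variant icon
control = {"browse": (4000, 1200), "search": (6000, 2100), "referral": (5000, 1000)}
variant = {"browse": (4000, 1320), "search": (6000, 2070), "referral": (5000, 1150)}

def cvr_by_source(counts):
    """CVR (installs / visits) for each traffic source."""
    return {src: installs / visits for src, (visits, installs) in counts.items()}

def overall_cvr(counts):
    """CVR across all sources combined."""
    visits = sum(v for v, _ in counts.values())
    installs = sum(i for _, i in counts.values())
    return installs / visits

def lift_by_source(control, variant):
    """Relative CVR lift of the variant over the control, per source."""
    c, v = cvr_by_source(control), cvr_by_source(variant)
    return {src: (v[src] - c[src]) / c[src] for src in c}

overall_lift = (overall_cvr(variant) - overall_cvr(control)) / overall_cvr(control)
print(f"overall: {overall_lift:+.1%}")
for src, lift in lift_by_source(control, variant).items():
    print(f"{src}: {lift:+.1%}")
```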

Re-engagement data

Depending on your company’s KPIs, looking at re-engagement data (e.g., open rate, number of sign-ups or registrations, in-app purchases, etc.) will also give you a deeper understanding of your Icon’s impact on the bottom of the funnel.

The app icon testing mistakes to avoid

After tracking thousands of Icon tests that leading mobile publishers have run on Google Experiments, we found that on average, only 20% of tests actually succeed in finding an Icon that converts better than the control. In fact, out of all possible elements to A/B test, Icon tests fail the most.

Why?

1) Lack of clear hypotheses

One of the major reasons most Icon tests fail is related to hypotheses, or lack thereof.

Hypotheses in the context of app store testing are precise statements that can be proven or disproven and should be used as a starting point for further investigation. They drive the creative design and direction of the test so it leads to actionable results. However, people tend to think of Icon A/B tests the way they think of web and landing page A/B tests—where you can change a single button color and suddenly see an uptick in clicks.

It’s important to understand that mobile is not like that, both because of the nature of the platforms and because of the unique challenges of app store testing in general (e.g., 100% of users are sent to the same place, every app store page has the same layout, visitors engage in different ways, etc.). In general, when developers or mobile marketers run their own Icon tests, they usually test elements that don’t actually create an impact.

For example…

Weak Hypothesis

- Visitors prefer to see an Icon with a blue background instead of a red background.

The issue with this hypothesis is that either users won’t notice the change or the hypothesis itself won’t lead to significant learnings about your app store visitors. So, users prefer a red background; now what?

When weak hypotheses are used to drive tests, the changes are too subtle to make a significant impact on the performance or CVR of the app store page. The key is to understand how to drive conversion, which means understanding what visitors respond to and what aspects of the app or game are most appealing to them. It’s important to develop a long-term strategy rather than continuously running multiple, unrelated Icon tests that don’t lead to valuable insights.

It’s also possible to develop hypotheses that don’t lead to any useful understanding. For example, while the Icon test example above is a vast improvement over simply changing the background color, we still don’t consider it as effective as it could be. The developer uses the same character (with the same facial expression) in similar positions, with slight adjustments to the weapons he holds. The design directions of the Icons are too similar to generate useful results, even though a “winning” variation was found.

Now let’s see a hypothesis from the other end of the spectrum…

Strong Hypothesis

- Players of my game need to build an emotional connection with a character before delving into the specifics of gameplay

This hypothesis, on the other hand, allows you to dig deeper into why a certain Icon won and helps you identify the main selling point of your game (e.g., characters or specific gameplay items) that will drive the most conversion. You can also use these insights when designing your other app store creatives.

Above is an example of a strong hypothesis being put to the test. Testing characters against each other typically won’t lead to meaningful results, but the key here is that the developer is testing characters with strong brand recognition. This is a powerful test for determining which of these widely known characters potential installers respond to best and which will convince them to install the game (in this case, The Flash).

2) Relying solely on Google Experiments results

One of the significant drawbacks of Google Experiments is that you don’t receive data on visitor behavior or engagement. This means you’re blind to the subtle positive or negative impact that Icon changes could have on visitors’ interaction with your page and their decision to install. This also means you lack insights that should be used to form the foundation and hypotheses of subsequent tests.

For example, in the Google Experiment test above, the developer tested a variety of Icons that showcased different gameplay environments. Although a winning variation was found, meaning that one Icon variation began to receive 100% of traffic, it’s hard to pinpoint what insight can be pulled from the test.

Why did this Icon perform better? The winning Icon looks similar to the Video thumbnail—did it win because it caused more people to watch the Video? Is there a correlation between the Icon and Video plays? If your Icon is increasing Video views and then you change your Video or Poster Frame (Video thumbnail), how will that impact your overall conversion? If you’re unable to answer these questions, you could potentially (and unintentionally) damage CVR. For instance, if the Icon worked because of the correlation with the Video, your upcoming Video release may harm CVR significantly, even if it’s a better Video on its own.

Overall, you need more information on actionable items you can implement post-test, not just an intellectual exercise that gives you a shallow understanding of what Icon had the highest conversion.

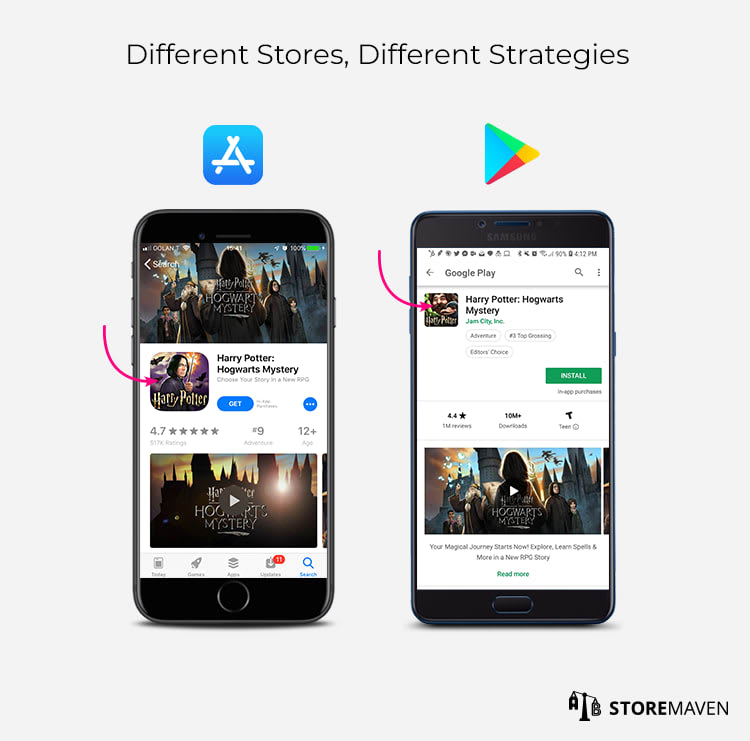

3) Using the same creatives for each app store

Through testing, you will also gain valuable insight into the differences between Google Play and the App Store. A major issue is that many developers assume the Icons they test in Google Experiments can also be applied to iOS and have similar results. This couldn’t be further from the truth.

For example, in some of our tests with leading game developers, gameplay-focused icons led to higher CVR on Google Play, while character-focused icons performed better on iOS. In this case, CVR would’ve been harmed had the developer pushed the same Icon to both platforms.

Overall, the App Store and the Google Play Store are fundamentally different platforms, and they should be treated as such in ASO. This is because:

- The overall design of the stores is still not the same (e.g., no autoplay feature for Google Play videos, different image resolutions, etc.)

- Developers often drive different traffic to each store (e.g., different sources, campaigns, and ad banners)

- Different apps are popular in each platform so competition varies

- The user base for Google is not the same as iOS—user mindsets and preferences are different.

Testing your app icon: Why do icon tests fail 80% of the time?

Given the icon’s importance and effect on conversion and retention, you want to ensure you’re displaying one that gives your app the best chance to maximize growth and drive conversions. App store testing is one of the most effective, data-driven ways of doing this.

Since icons appear in a variety of areas, it’s not possible to test them cohesively throughout the different funnels in which users are exposed to them. However, this downside doesn’t eliminate the benefits of testing—and improving—the likelihood of success through a better icon.

After tracking thousands of icon tests that leading mobile publishers have run on Google Experiments, we found that, on average, about 20% of tests actually succeed in finding an icon that converts better than the control. In fact, out of all the possible elements to A/B test, icon tests fail the most.

Why? There are several reasons:

1. Weak hypotheses lead to too subtle differences.

One of the major reasons most icon tests fail is related to hypotheses, or the lack thereof. As mentioned above, hypotheses in app store testing are precise statements that can be proven or disproven and should serve as a starting point for further investigation. They drive the creative design and direction of the test and lead to actionable results. However, people tend to think of A/B testing in general, no matter what is being tested or on which platform, as a one-and-done trick, as though changing a single button color will boost the number of clicks. A/B testing is not a growth hack for quick wins. That’s even more true for mobile apps, due to the nature of the platforms and the unique challenges of app store testing in general (e.g., 100% of users are sent to the same place, every app store page has the same layout, visitors engage in different ways, users make decisions very quickly, etc.).

Recall the strong hypothesis example above, about players needing an emotional connection with a character before delving into gameplay.

The key is to understand how to drive conversion, which means understanding how visitors respond to different creative assets and marketing messages and what aspects of the app are most appealing to them. It’s important to develop a long-term strategy rather than continuously running multiple, unrelated icon tests that don’t lead to valuable insights.

Good hypotheses are precise and framed in a way that, once tested, will advance your understanding of your app store visitors.

2. Siloed brand teams hold the keys to the icon and, consequently, hurt progress.

For this process to work, teams mustn’t work in silos and should strive to join forces and share their progress constantly. Every so often we encounter teams that generate some great results from our tests but, based on these results, face distressing challenges trying to convince the company to take a clear new direction. Remember this common mistake before you start the process and even more so when going through a rebranding phase: you need your brand team by your side, understanding the impact and benefits of rebranding and willing to change on the fly if necessary.

3. The icon is part of the larger story.

Another important thing to consider is that the icon is only one part of the story, albeit a major one. It needs a clear role within your narrative, and it’s vital that it blends, matches, and works with the other elements. A good example of neglecting this is putting a strong character on the icon who isn’t portrayed in any other element.

Why you shouldn’t stop at icon tests

There’s no denying that Icon testing should be a critical part of your ASO strategy. Since an optimized Icon can have such a positive effect on your installs, it’s easy to assume that a non-optimized one can potentially harm conversion by the same amount it can benefit you.

However, your Icon is not the only test-worthy app store creative. On average, elements such as your Video or Screenshots can yield higher returns—in some cases, up to 40% higher CVR.

Based on our analyses of over 500M users, we’ve identified the most impactful app store product page marketing assets by CVR lift:

At the end of the day, an Icon test is a great place to start, and the results can give you unique insights into your users and the best way to showcase your brand through your app store creatives.

But when you’re designing Icons, it’s equally as important to look at the other assets at your disposal, such as Screenshots or Video, so you can create a long-term ASO strategy that incorporates all of these visual elements. This is what can truly set you apart and positively impact the return on investment (ROI) of your mobile app marketing efforts.

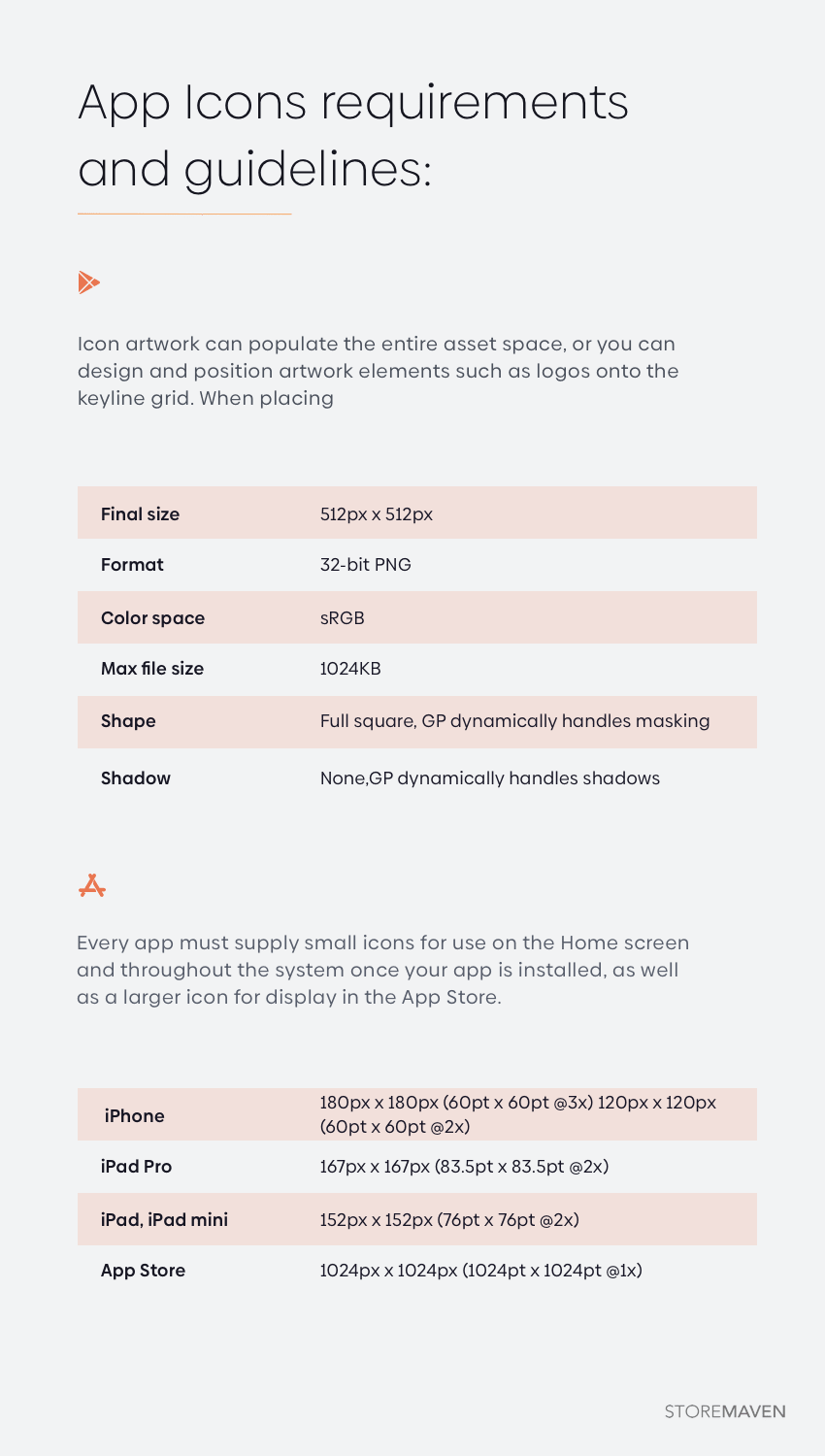

App icon design requirements

Here you will find the guidelines for app icon designs for each store:

We’ve created full app icon design requirements for each app store. Read more about Google Play icon guidelines and iOS app icon requirements.

To summarize

This article gives you a good framework for thinking about and designing your icons: which category to choose, which principles to follow, and the many blunders to avoid. We also discussed the importance of app icon testing and how crucial it is to pick the right hypotheses and test them the right way.

But there are many more aspects on your way to conversion rate supremacy: you’ll need to master them all to enjoy a superior CVR.

So, if you’re now asking yourself about choosing the right title for your product page, check our academy for more articles that will help you become an ASO wizard.