In the app store ecosystem, User Acquisition (UA) and App Store Optimization (ASO) teams always have one goal in mind: mobile growth. And this means the constant acquisition of new quality users. To do that you have to understand what marketing and UA efforts cause quality growth and which levers to pull. And herein lies the major challenge.

Measuring installs should be easy. It should be one concrete number. Alas, nothing is that simple when it comes to the black box of the app stores. UA and ASO teams need to measure and report on their respective achievements and core KPIs, and they need a finite install number in order to do so. ASO teams concern themselves with discoverability in the app stores and organic performance, whilst UA teams are mostly concerned with paid channels and return on ad spend (ROAS). Install metrics (and their respective organic/paid breakdowns) are therefore vital, but each team needs to make sure it’s using the right install metric, the one that makes sense for its needs. The two teams’ metrics are not the same, and they shouldn’t be.

Let’s look at the Apple App Store specifically to break it down:

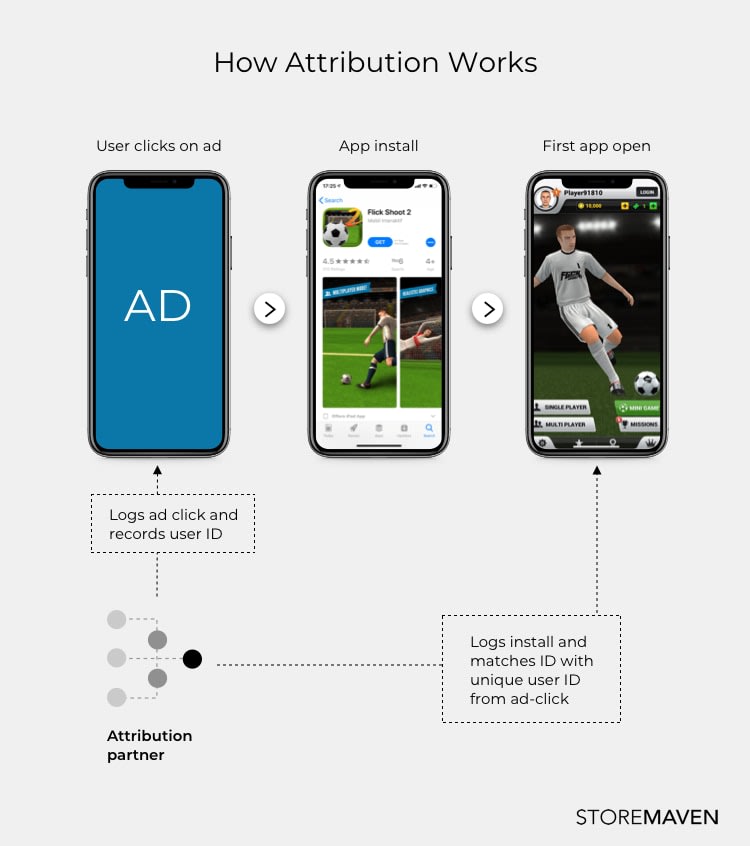

On the web, a lead can be tracked and traced throughout the entire funnel. When it comes to the app stores though, Google and Apple prohibit tracking of the user journey. You can track leads until they get to the app stores and then poof, nothing. That nothing created an entire industry of attribution companies that seek to solve the paid UA attribution problem.

Now UA and ASO teams want to go further than just a sum total of installs and want to be able to connect those installs to marketing and UA efforts in order to track success and monitor the viability of these activities. Correctly attributing a converted user to its source is what allows UA and ASO teams to measure and report on their successes.

That’s where attribution companies step in. They solve the paid attribution challenge using fingerprinting technology and unique device identifiers to track and match users that were directed to the app stores from a specific ad.

While attribution vendors definitely achieved a major breakthrough with this technology, several problems still make it hard to truly measure installs, attribute conversions and distinguish between organic and paid installs. Attribution platforms can only measure and attribute a user once they have installed and opened the app, meaning all installs where the user came in through UA but didn’t open the app will be labelled as organic. But organic in this context is just a catchall for ‘cannot be attributed’ and isn’t entirely accurate. To truly understand what drives organic installs you need to understand what obstacles exist in the attribution pipeline and in general strategies. We’ll take a closer look at the three most common challenges, which come from focusing on the wrong metrics, misattribution, and cannibalization. Because after all, acknowledging the problem is the first step in finding a solution.

- The ‘Install KPI’: You need to make sure you’re looking at the right metric for the context and the decision you want to make. There are two install KPIs (app units and attribution-counted installs) and you need to look at both of them, holistically. By focusing solely on attributed installs, you can miss other ASO successes in the app stores and other opportunities to further drive organic installs. For example, a paid campaign might have a low ROAS and miss its UA targets because low-quality users are downloading the app but not actually using it, so they do not show up as successful attributed installs. But these downloads are still counted as app units, and the increase in app units has potential knock-on effects in the app store, causing climbs in rankings and a subsequent significant increase in organic installs. The seemingly unsuccessful UA campaign is actually hugely successful from an ASO perspective and should not be shut down. It’s imperative that your teams talk to each other so these cross-silo successes are seen, valued and included in KPI reporting.

- Misattributed Organic Users: Any time a user sees and clicks on an ad they can be attributed (via the complicated attribution systems) to this ad. But what if a user merely sees an ad?

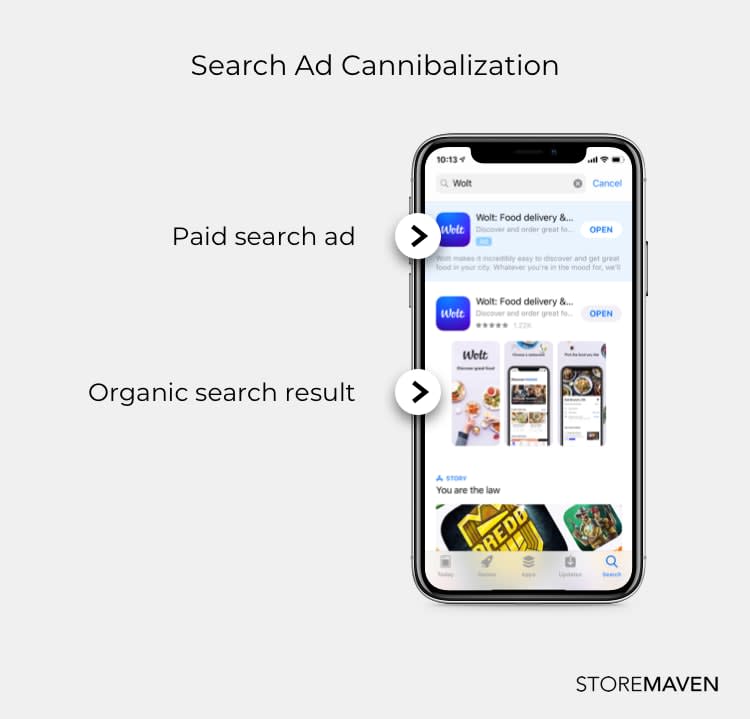

There are often many online campaigns where a user is shown an ad promoting an app (such as within YouTube videos, or postings by influencers). Users that click on the ads can be tracked through click-through attribution. Users that merely saw the ad, and then independently go into the store to search for the app, can, depending on the ad platform, be tracked with view-through attribution. But what about users who cannot be tracked online? What about offline campaigns? It’s been the bane of marketing teams since long before the internet was even a thought. Any offline marketing campaigns, such as promotions at major events, TV ads, or billboards, build brand recognition and interest in an app. These encounters cannot be tracked. If a user then decides to search for, and subsequently install, the app based on messaging they saw in these campaigns, the install will be reported as entirely ‘organic’ even though it should be attributed to the specific ad campaign they were exposed to.

- Search Ad Cannibalization: This problem arises when you start paying for installs that you could have gotten for free, specifically around branded search. It happens when a user searches for a branded keyword and the results show a search ad above the organic listings.

The likelihood of users clicking on the top option is high (which is why rankings are so important across the app stores). Branded search users are high-intent users who know what they are looking for. They would install from anywhere, but because they clicked on the top result (the search ad) you have now paid for a user that would otherwise have been free. That cannibalizes your organic installs, costs you money and further distorts install attribution numbers. It’s important to strike a balance between protecting your brand (by bidding on your own brand terms to ensure competitors don’t get those ad placements) and not cannibalizing your organics. It’s about finding your own app’s specific sweet spot.
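To make the cannibalization trade-off concrete, here is a minimal arithmetic sketch. It assumes you have some estimate of the share of brand-search ad installs that would have happened organically anyway; that share (`organic_anyway_share`), the function name, and the example figures are all hypothetical and would have to come from your own tests, such as pausing brand bids for a period.

```python
def brand_search_incrementality(paid_installs, spend, organic_anyway_share):
    """Split brand-search ad installs into cannibalized vs. incremental.

    organic_anyway_share is an ASSUMED estimate (0..1) of paid installs
    that would have arrived for free without the ad.
    """
    if not 0.0 <= organic_anyway_share <= 1.0:
        raise ValueError("organic_anyway_share must be between 0 and 1")

    cannibalized = paid_installs * organic_anyway_share
    incremental = paid_installs - cannibalized

    # Cost per install as reported vs. cost per truly incremental install.
    reported_cpi = spend / paid_installs if paid_installs else None
    incremental_cpi = spend / incremental if incremental else None

    return {
        "cannibalized": cannibalized,
        "incremental": incremental,
        "reported_cpi": reported_cpi,
        "incremental_cpi": incremental_cpi,
    }


# Hypothetical example: 1,000 brand-search installs for 500 in spend,
# assuming 75% of those users would have installed anyway.
result = brand_search_incrementality(1000, 500.0, 0.75)
print(result)
```

The gap between `reported_cpi` and `incremental_cpi` is one rough way to look for the sweet spot: the higher the assumed organic share, the more each genuinely new user from brand bidding actually costs.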

Yup, measuring mobile growth is complicated

All these obstacles paint a picture of how challenging it is to measure mobile growth. While attribution is meant to enable you to measure your marketing and UA efforts, accurately attributing installs to those efforts is still incredibly messy. The real question then appears to be, “why bother?”

The simple answer is that measuring some of your efforts is better than measuring none of them. Most UA teams use a ‘k’ factor to account for misattributed organics, but it’s all still fairly assumptive. Because they base future plans on past insights, many UA teams fall into the trap of making decisions and crafting strategy off direct ROAS instead of true ROAS, and the potential for mistakes is around every corner.
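The difference between direct and true ROAS can be sketched in a few lines. The article does not define the ‘k’ factor, so this sketch assumes one common reading: `k_factor` is the number of extra "organic" installs credited to each attributed paid install. The function names, the revenue-per-organic-install input, and the example figures are illustrative assumptions, not a standard formula.

```python
def direct_roas(paid_revenue, spend):
    """ROAS counting only revenue from installs attributed to the ads."""
    return paid_revenue / spend


def true_roas(paid_revenue, spend, paid_installs, k_factor,
              revenue_per_organic_install):
    """ROAS that also credits the organic uplift assumed to come from ads.

    k_factor is an ASSUMED multiplier: extra organic installs per
    attributed paid install. It is an estimate, not a measured value.
    """
    uplift_installs = k_factor * paid_installs
    uplift_revenue = uplift_installs * revenue_per_organic_install
    return (paid_revenue + uplift_revenue) / spend


# Hypothetical campaign: 1,000 spent, 800 in attributed revenue,
# 500 attributed installs, k = 0.5, 2.0 revenue per organic install.
print(direct_roas(800.0, 1000.0))
print(true_roas(800.0, 1000.0, 500, 0.5, 2.0))
```

With these made-up inputs the campaign looks unprofitable on direct ROAS but profitable once the assumed uplift is included, which is exactly why the choice of `k_factor` deserves scrutiny: the conclusion flips on an estimate.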

To achieve the ASO goal of increasing organic installs, app units (not installs) is the number that ASO teams need to be mostly concerned with. Any success in keyword optimization and rankings within the stores is determined by the app stores’ algorithms. Whilst the black box is real, we know that one of the most important variables they use is app units.

Now each team is concerned with its own goals and uses the metrics that best help achieve them, which encourages bias and skews the data further. Thus in one company, you have one team lauding app units as the best metric and another lauding attribution-based installs, creating internal confusion and potentially resulting in conflicting strategies. The truth lies somewhere in between those two metrics.
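One way to keep both metrics in view is to report them side by side. The sketch below assumes you can pull app units from the store console and ad-attributed installs from your attribution platform for the same app, store and date range; the class and field names are hypothetical. In practice the two sources define their counts differently, so treat the remainder as a rough catchall rather than a precise organic figure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstallSnapshot:
    app_units: int        # downloads reported by the store console
    attributed_paid: int  # installs the attribution platform matched to an ad

    @property
    def catchall_organic(self) -> int:
        # Everything that could not be attributed: true organics, users who
        # never opened the app, offline-driven installs, and so on.
        return self.app_units - self.attributed_paid

    @property
    def catchall_share(self) -> float:
        if self.app_units == 0:
            return 0.0
        return self.catchall_organic / self.app_units


# Hypothetical week: 10,000 app units, 4,000 ad-attributed installs.
snapshot = InstallSnapshot(app_units=10_000, attributed_paid=4_000)
print(snapshot.catchall_organic, snapshot.catchall_share)
```

Tracking how `catchall_share` moves when paid campaigns start or stop gives both teams a shared number to discuss, without either metric being declared the single source of truth.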

Check your blind spots then hit the gas

There is no magic solution that can correctly attribute every install to its related efforts, but by breaking down the problem you can find usable, actionable ways forward. Start with these two necessary steps.

Firstly, get your ASO and UA teams talking to each other, and be vigilant in removing potentially conflicting strategies. Acknowledge the problems that stand in the way of making the right data-driven decisions.

Secondly, create a better framework for arriving at data-driven decisions about where to focus your resources (both time and budget). Make sure you’re using the right metrics for the right scenarios. Continue to monitor your attribution platforms and stay on top of the latest innovations coming out of the attribution sphere.

These challenges might not have easy solutions, but better monitoring can at least help build a better system in the future. Now that you’ve identified some of the blind spots and challenges that abound in measuring mobile growth, the next challenge we all face is making sure we’re collecting the right data to begin with and carefully monitoring it over time.

Our next installment in the measurement series will dive deeper into this issue, so more on that another time.