We’re constantly driven to find out more about the needs of our customers and how we can best learn from those already succeeding in the ASO space.

We believe in learning from doing.

We believe in learning from the best.

So what better way to learn than to hear straight from those in the know who practice every day?

And so we did.

When it comes to getting inside the heads of ASO managers and mobile growth teams, the simplest solution is often the best: just ask them.

We spoke to Ben Chernick from Jam City to find out exactly what makes him tick, how he tackles his growth goals and what he considers the most vital (and valuable) processes for his team.

Let’s start with the basics: what is your role at Jam City and what’s it like?

As Associate ASO Manager at Jam City, I’m blessed to work on some of the most enduring and beloved mobile gaming IPs, games like Cookie Jam, Panda Pop, Harry Potter: Hogwarts Mystery and the upcoming Frozen Adventures game. I recently joined Jam City after working for the ASO vendor YellowHEAD and am enjoying being on the client side.

How do you define ASO and the role it plays in a company like Jam City?

For me, I view ASO as an all-encompassing process. It’s not just keywords or creatives but a holistic view of the company as a whole. It feeds on, and incorporates into, user acquisition, consumer insights, M&A and all sorts of different elements. I appreciate how the roles all seem to feed into one another.

As an ASO manager, what does your day-to-day look like?

It’s a very cross-functional role. I work closely with all the Jam City teams from San Diego all the way down to Bogotá and Berlin. I typically start my day by checking in with everybody and specifically with the game teams and creative services to prioritize the projects for the day. I work with the creative teams to come up with A/B testing hypotheses and then I work with our in-house UA team to set up those tests. I am responsible for monitoring and reporting on testing to the rest of the organization and we plan out our next steps from there. Having a truly cross-functional process ensures that each step of the ASO process is extremely strategy-driven and all of our hypotheses are based on real data and our assets are vetted through extensive testing.

Jam City has a diverse suite of games. How do you prioritize which games to invest in at any given time?

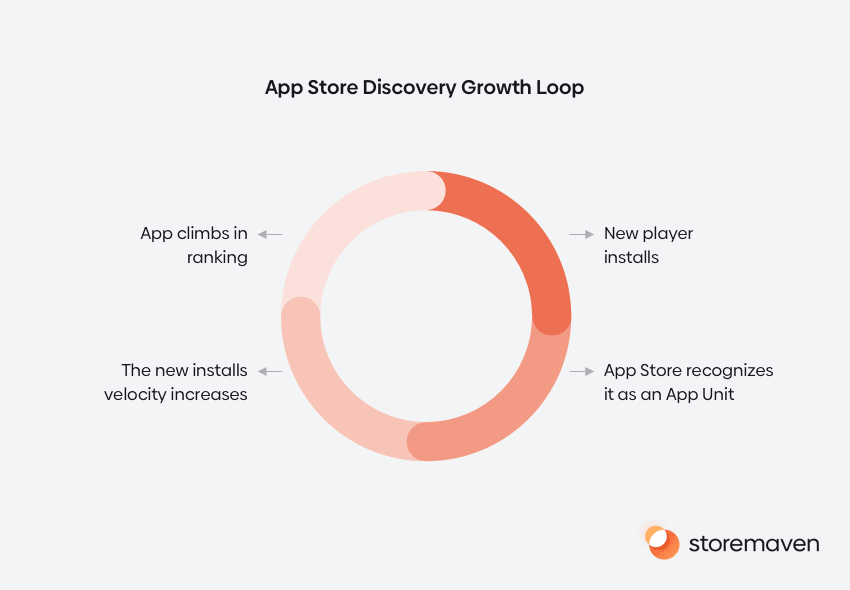

We prioritize our projects based on a few different factors. Generally, we focus on the one that seems the most in need of an update but we also take into account the types of assets that can drive the largest conversion lift. StoreMaven has a really great post that we reference pretty frequently. The assets that are more visible and get more exposure will lead to a higher lift so we take into account how much lift an asset could potentially drive and then we prioritize from there.

Testing provides more value than simply CVR uplift: it allows for an understanding of distinct audiences and user preferences.

Generally, we have a core list of priority titles that we focus on but if there’s one that hasn’t had much testing, we might prioritize that game in order to learn as much as possible about that audience. We want to give all our games attention and we want to know what is specific to each audience. That’s crucial to us.

How do you run the A/B tests?

iOS and Android are different so we run separate tests for both platforms wherever possible. For Android, we use Google Experiments which is built into the Google Play Console and for iOS, we use StoreMaven. Their sandbox testing environment accurately replicates the App Store with representative sample traffic instead of testing in the live ecosystem.

After the tests are completed, how do you analyze them?

It’s important that we wait for a test to have full confidence whenever possible, which for us would mean 90% or higher after collecting a sufficient number of samples so that we can be confident that whatever results we’re seeing are true and repeatable.
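To make the 90% threshold concrete, here is a minimal sketch of one common way to estimate confidence that a variant beats the control: a one-sided two-proportion z-test. This is an illustration only. Google Experiments and StoreMaven use their own statistical methods, and the function name and sample numbers below are hypothetical, not Jam City data.

```python
from math import erf, sqrt


def confidence_variant_beats_control(control_installs, control_visitors,
                                     variant_installs, variant_visitors):
    """Approximate confidence (0..1) that the variant converts better.

    Uses a one-sided two-proportion z-test with a pooled standard error.
    This is an assumed method for illustration, not the one any
    particular testing platform is known to use.
    """
    rate_control = control_installs / control_visitors
    rate_variant = variant_installs / variant_visitors

    # Pooled conversion rate under the assumption of no real difference.
    pooled = (control_installs + variant_installs) / (
        control_visitors + variant_visitors
    )
    std_error = sqrt(
        pooled * (1 - pooled) * (1 / control_visitors + 1 / variant_visitors)
    )
    if std_error == 0:
        raise ValueError("conversion rates of exactly 0% or 100% give no signal")

    z = (rate_variant - rate_control) / std_error
    # Standard normal CDF, written with erf so only the stdlib is needed.
    return 0.5 * (1 + erf(z / sqrt(2)))


# Hypothetical sample: 1,000 visitors per arm, 30.0% vs 33.0% conversion.
confidence = confidence_variant_beats_control(300, 1000, 330, 1000)
print(f"confidence: {confidence:.3f}, ready to call: {confidence >= 0.90}")
```

With small samples the same conversion gap yields a much lower confidence, which is why waiting for a sufficient number of samples matters before closing a test.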

After closing a test, we look at a pretty wide number of factors. The first would definitely be conversion. The ideal result would be where we have a higher conversion than the control. But we also look at day-one retention now that Google Experiments measures that and try to find what is the best middle ground between conversion and retention. Ideally, and oftentimes in reality, those two are the same variant.

When we test with StoreMaven we have the opportunity to go in deeper thanks to the kinds of data that StoreMaven is collecting. Each A/B test that we run with StoreMaven can teach us something new and repeatable about the audience and allows us to build each successive round of testing on a growing knowledge base. Take video, for example: we break down the viewership and conversion rate second by second so we can see exactly at which point users drop off or at which second users are converting. That granularity gives us really valuable and important data, not just for the video strategy but for other creatives as well. We can incorporate those key conversion moments into screenshots, into icons, etc. The insights aren’t limited to the video and they don’t even have to be limited to that one game necessarily. The same is true with the screenshots. We try to look at which screenshot users convert on as well as the drop rate from one screen to the next. Anything that we can measure in terms of behavior is honestly super helpful because it helps us build a profile of our users and what they do and do not prefer. And we can repeat that in the future.
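As a rough illustration of the second-by-second breakdown described above, the sketch below finds the point in a video where the largest share of remaining viewers leaves. The data format (a list of viewer counts per second), the function name, and the numbers are all hypothetical, and this does not represent StoreMaven’s actual export or API.

```python
def largest_drop_off(viewers_by_second):
    """Return (second, fraction_lost) for the steepest viewer drop.

    viewers_by_second[i] is the number of users still watching at
    second i. fraction_lost is measured relative to the viewers who
    were still watching one second earlier.
    """
    if len(viewers_by_second) < 2:
        raise ValueError("need at least two seconds of data")

    drops = []
    for second in range(1, len(viewers_by_second)):
        before = viewers_by_second[second - 1]
        after = viewers_by_second[second]
        lost = (before - after) / before if before else 0.0
        drops.append((second, lost))

    # Pick the second with the highest relative loss of viewers.
    return max(drops, key=lambda item: item[1])


# Hypothetical viewership curve for the first six seconds of a video.
viewers = [1000, 940, 900, 700, 680, 670]
second, lost = largest_drop_off(viewers)
print(f"steepest drop at second {second}: {lost:.1%} of viewers left")
```

The same idea carries over to screenshots by swapping seconds for screenshot positions, which gives the drop rate from one screen to the next.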

You must have gleaned a lot of insights over the years. Have any of them stood out to you?

We were testing our newest game, Vineyard Valley, relatively recently with StoreMaven and had been doing a lot of Google testing as well. For context, Vineyard Valley is a blast puzzle game that has a decorating meta and a strong story. There are a lot of different elements happening at once and we had to determine what to focus on specifically. We conducted a really extensive testing process during soft launch which is typical for all Jam City titles.

We tested a few different screenshot options and our control set performed incredibly well but as development moved forward the control contained a few elements that became slightly dated. The gameplay was updated. The visual style had been updated. So we attempted to replace those elements in the different variants but because the control resonated so much with our users, it was really difficult to find a high-performing alternative. We were also running tests in Google Play at the time but it was really tough to pinpoint which elements of the control were driving that positive behavior.

We were running a video test with StoreMaven at the same time and, because we can track all of the user behavior there, we were able to gather data on screenshots during that same test.

We went back and looked at both the video and the screenshots to find out which were the moments that successfully converted users and which moments didn’t seem to resonate with users. We found that users were responding really well to our lead character Simone and that they preferred the scenes in the video and screenshots to look as close as possible to raw gameplay. That might actually sound counterintuitive to anyone who has spent a lot of time making mobile screenshots but that’s what the data showed. We were able to take that insight back to our artists and they came back with a new screenshot set that was based entirely on raw game capture that used Simone in the first two or three screenshots. They also had very simple text containers on the bottom highlighting the different core features of the game. We ran that again through Google Experiments (to keep the environment the same) and not only did that creative set end up beating the control but it actually tested multiple times higher than the control. We were driving more qualified users into the game because we were teaching them more about the game before they actually installed. I think that’s part of the reason that that specific creative set performed so well.

SM: Sometimes the developers or people involved in the process have a really strong opinion about one creative set versus another. The testing process makes it really easy to see what users prefer rather than trying to guess as to how they would then react.

BC: Testing democratizes the process of choosing what to launch within the store.

If testing is so valuable to your team, how does it fit into your overall marketing strategy?

Pre-launch testing is incredibly important for us. We incorporate testing from the very beginning when we have our pre-launch planning phase. Testing for soft launch influences both the assets that we put into the store as well as which geos we plan to soft launch in. We want to make sure that we can maximize the traffic that comes in through the soft launch phase to learn as much as possible about our current and future audience. So we try to launch in geos that have similar creative preferences to the United States and a similar sort of gaming audience in which to study. Essentially, testing is baked into our soft launch philosophy at a core level and that way we start learning right from the very beginning.

How do you get ideas for which hypotheses to test and how to frame them?

We source our ideas for tests in a lot of different ways. The first one would be through market research. We look at the top 100 or so apps in our genre and see what they’re doing. We use inspiration from the market and try to incorporate successful elements whilst staying true to the voice of our titles. We also get ideas from our internal Consumer Insights team. We’re really lucky to have a super robust CI team and that keeps us in touch with what players love and what they want to see more of. Wherever we can we try to represent the elements that users love in the creative. And then finally we use what was or wasn’t successful in previous tests as a jumping-off point for new ideas that build on top of the insights we gathered.

Testing is a sort of linear process for us where we can plan out hypotheses that build on one another in advance. We test, analyze results, and then build on top of that. You can very clearly trace a line from now back to when we first started this process two or three years ago. For example, if conversion spikes when a certain character appears in a certain asset, we know we should continue using that character in other creatives and in that way test results are a really powerful source of ideas for the future.

SM: Another idea that mimics an in-house CI team is to try to look at the reviews of a specific app or game to see firsthand what other users are saying. It’s another great source for hypotheses.

BC: That’s the cool thing about ASO: it can scale with you. One of my favorite parts of ASO is being creative and finding alternatives like that.

Have you seen any significant impact after testing?

The type of lift that we expect really depends on many different factors including the genre and the live ops cadence of the game. For casual titles like Cookie Jam and Panda Pop, we could potentially see a lift of 5% and up.

But it also often depends on what you’ve conditioned users to expect. Harry Potter: Hogwarts Mystery is a strong story-driven game where each update brings a new storyline with new characters. Fans have gotten to be really excited about these updates so a new icon or a new piece of creative acts as a signal to them that there is new content. As a result, we can drive really large conversion lifts with a new icon or with a new store preview video. Depending on the event and seasonality, we’ve actually seen day over day increases of up to 100% and higher in conversion. And the great thing about that is that those lifts diffuse for a period of one to several weeks. So we’re able to enjoy substantially higher conversion for several weeks.