The current situation with Product Page Optimization (PPO) A/B testing has become one of the most talked-about topics in ASO expert groups and forums.

“What is this thing? It’s barely usable!”

“How can I actually use this? It doesn’t have 80% of what we need!”

“Since Seinfeld is on Netflix my life is ruined”

On a more serious note, native product page a/b testing on the App Store was perhaps the most anticipated feature since ASO was invented.

But after we spent a few months figuring out how PPO can be utilized to actually improve conversion rates on the App Store, reliably and consistently, it seems there are some fundamental challenges developers are facing.

In this short note, we’ll go through these challenges, and I hope this helps articulate what exactly is inhibiting your ability to consistently increase App Store conversion rates with PPO. As more pressure is placed on ASO teams to increase conversion rates, and as more tools are (allegedly) available, this note can also be shared internally to help make the case for why PPO isn’t living up to its promise so far.

Why is the current PPO implementation insufficient to consistently grow App Store CVR?

There are six main reasons PPO is currently hard to adopt successfully.

#1 The inability to analyze results on a GEO basis

One key point is that although you can select which countries and localizations your PPO a/b test will run in, you cannot analyze the results at the GEO level. You only see the results (conversion rates, impressions, downloads, uplift, and confidence) in aggregate, which renders them pretty much meaningless if the localizations in which the test runs have significantly different audiences (which most do).

At Storemaven, we analyzed tens of thousands of tests and the result is clear – different audiences in different countries have different preferences when deciding to download an app/game based on App Store creatives and messaging.

A PPO test run in multiple countries will not show those different preferences, which makes it impossible to make an educated decision about which product page creatives to apply in which countries.

So the solution is to run a single a/b test for each country, right? Well….
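To make the aggregation problem concrete, here is a minimal sketch in Python. The country codes, impression counts, and download counts are entirely hypothetical, and the helper names (`cvr`, `relative_uplift`, `aggregate`) are ours for illustration; PPO exposes none of this per-country data, which is exactly the point.

```python
# Hypothetical per-country results as (impressions, downloads).
# PPO does not expose this breakdown; it only reports the aggregate.
results = {
    "US": {"control": (100_000, 3_000), "variant": (100_000, 3_600)},
    "JP": {"control": (100_000, 4_000), "variant": (100_000, 3_400)},
}


def cvr(impressions, downloads):
    """Conversion rate: downloads divided by impressions."""
    return downloads / impressions


def relative_uplift(control, variant):
    """Relative change in CVR of the variant compared to the control."""
    return cvr(*variant) / cvr(*control) - 1


def aggregate(results, arm):
    """Sum impressions and downloads for one arm across all countries."""
    impressions = sum(r[arm][0] for r in results.values())
    downloads = sum(r[arm][1] for r in results.values())
    return impressions, downloads


# What you would want to see: uplift per country.
per_country = {
    country: relative_uplift(r["control"], r["variant"])
    for country, r in results.items()
}

# What an aggregate-only report shows: a single blended uplift.
overall = relative_uplift(
    aggregate(results, "control"), aggregate(results, "variant")
)

for country, uplift in per_country.items():
    print(f"{country}: {uplift:+.1%}")
print(f"Aggregate: {overall:+.1%}")
```

In this made-up scenario the variant converts about 20% better in one country and 15% worse in the other, yet the blended figure shows no difference at all. An aggregate-only report would suggest the test found nothing, when in fact it found two opposite answers.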

#2 The inability to run more than one test concurrently

The current implementation of PPO does not allow for running more than one test concurrently, so if you need to improve conversion rates on the App Store for several different countries and markets, you have to do this sequentially.

As each test takes time (and you’ll see more about that in point number 6) this means that running tests for several markets would take many long months at best, if not years (not even mentioning the need to run follow-up tests in each country to double-down on opportunities uncovered by the first test).

#3 A new version release immediately ends a running test

Making things even more complex, each time your team submits a new version of the app to the App Store, be it a bug fix or a normal version update, any running PPO test ends immediately, regardless of whether it has run its course and reached confidence.

Many teams have a two-week update schedule and some even have 7-day cycles. Once you also account for the bug-fix versions submitted from time to time, it becomes very hard to find enough time to run a test, let alone be confident that you’ll have the time to conclude it properly.

Let’s face reality: the product team that needs to push a new version to the App Store will almost always get preference over a PPO a/b test.

#4 Clunky icon testing capabilities

If you wish to test and optimize your conversion rates through app icon a/b testing, you’re in for a ride.

First, you need to include the icons you want to test in the binary of the app in your next app version submission. Then, if a certain variation besides the control variation performs better and you want to hit ‘apply’, you’re about to be surprised.

What will actually happen is that all of the other creatives (besides the icon) of the winning variation you chose to apply will, in fact, be applied and become your default product page. Besides the icon.

Wait, what?

Let’s go over it again. The icon is an element you specify in the binary of the app version as the “default icon” in order for users to actually see it on their home screen when they finish downloading the app.

As you chose to apply a variation whose winning icon was not specified in the binary of the app version as the default icon (but as an alternative icon for testing purposes), hitting ‘apply’ won’t change that.

In order to actually implement the winning icon, you need to submit yet another app version with the new icon specified as the default one in the binary.

So two app version submissions with a PPO a/b test in between mean that, in reality, it’ll take you many weeks to properly test an icon, and that’s only if you can actually pull it off and plan for sufficient testing time between releases (see point 3). We recommend downloading the guide below to see how to harness PPO with Storemaven’s solution.

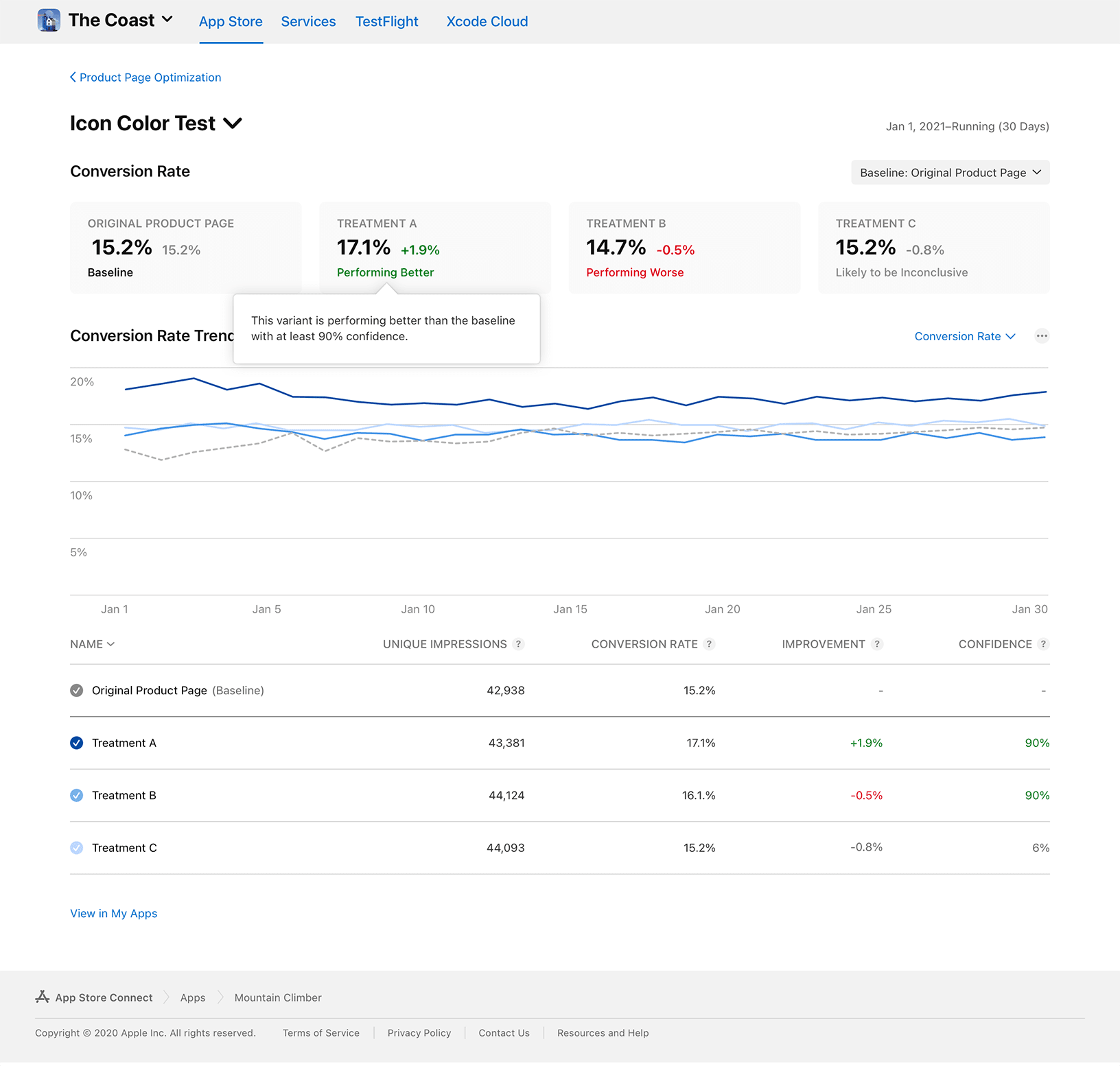

#5 The unclear test data

All results are based on a metric called “estimated CVR” – we don’t have any data on what this means, and the only raw numbers we have are a rounded-up total impression count.

This means that you can’t analyze the actual raw data to understand which variation performed best and you need to count on data that can’t be verified.

#6 PPO requires an extremely large sample size

Tests that don’t quickly rack up hundreds of thousands (even millions) of impressions end up with extraordinarily low confidence levels that don’t allow you to draw any conclusions about the test itself.

This is due to the selected statistical model and its current implementation within PPO a/b tests, and this is demonstrated through multiple reports in various ASO discussion groups about tests with extremely low confidence levels even after running them for weeks.

This makes the average test result an insufficient tool to actually make a decision about which creatives will convert more of your desired audience. In some cases, tests reached only 1%-5% confidence, which actually means that in the majority of cases applying the winner will result in a different impact than the one that you’re expecting. If it’s a negative impact, your ‘apply’ decision can lead to disastrous results and losing tens of thousands of potential downloads as a result.
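To get a feel for why the numbers get so large, here is a rough sketch of a textbook sample-size calculation for comparing two conversion rates (a two-sided two-proportion z-test). This is not the statistical model PPO uses, which is not documented in detail; the function name `impressions_per_variation`, the 3% baseline CVR, and the uplift values are all assumptions chosen for illustration.

```python
from math import ceil, sqrt
from statistics import NormalDist


def impressions_per_variation(baseline_cvr, relative_uplift,
                              alpha=0.05, power=0.80):
    """Approximate impressions needed per variation to detect a given
    relative uplift with a two-sided two-proportion z-test.

    A standard frequentist approximation, NOT Apple's PPO model.
    """
    p1 = baseline_cvr
    p2 = baseline_cvr * (1 + relative_uplift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance threshold
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    p_bar = (p1 + p2) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


# Hypothetical scenario: 3% baseline CVR, different uplifts to detect.
for uplift in (0.02, 0.05, 0.10):
    n = impressions_per_variation(0.03, uplift)
    print(f"{uplift:.0%} relative uplift: ~{n:,} impressions per variation")
```

Under these assumptions, detecting a 5% relative uplift on a 3% baseline takes roughly 200,000 impressions for each variation, and smaller uplifts need far more. Since a test splits traffic between the control and every treatment, the total impressions required grow with each variation you add.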

So, what to do about all of this?

- Plan smartly around your version release schedule – Make sure you have assets prepared prior to release so you can start immediately post release, and ensure there is no release planned for the next 2-3 weeks.

- Avoid testing icons – The development work, as well as the inability to know what type of users are in the test, makes icon testing extremely challenging at this point in time (e.g., browse users who see your icon will probably convert at a low rate, as that’s the nature of browse users. If one treatment has a higher share of browse traffic, the test will be inherently biased).

- Be careful when implementing results – given that many tests fail to reach a sufficiently high confidence level, be very careful when deciding whether to apply results or not.

- Gain more confidence by leveraging replicated store testing + CPP testing – in order to get more confidence behind your decision to apply new App Store creatives and be assured you will not demolish your conversion rates, a savvy marketer such as yourself should take into account more data points.

- Replicated App Store Testing – you can run a test on a replicated product page in a sandbox environment such as Storemaven, quickly, without any limitations, and actually design the test to include only traffic sources that represent the audience you wish to improve conversion rates for. This will add a thick layer of confidence to your decision and you can back it up easily (imagine a mad boss: “Why did our App Store conversion rates tank? What did you do?!?”)

- Native Custom Product Page Testing – with Storemaven’s CPP testing technology you can run a native test using two or more CPPs and leverage Storemaven’s powerful statistical engine, StoreIQ, to conclude tests with confidence. Supplementing your decisions with CPP a/b tests can help you make the right one.

In conclusion

PPO tests in their current implementation are far from a full, sufficient solution for savvy mobile marketing and ASO teams. In order to overcome these issues, you have to tap into additional tools that are in your toolbelts such as replicated App Store testing and native Custom Product Page testing.

We truly hope that the PPO functionality will improve over time, but until then I hope this note will help you and your team to move forward in the best possible way.

Contributor: Esther Shatz