After analyzing thousands of app store creative tests that leading mobile publishers have conducted on our platform, we’ve identified what makes companies successful in their app store optimization (ASO) strategies, and why many others fail.

With over 5 million apps available across the Apple App Store and Google Play Store, it's no surprise that high saturation and increased competition in the mobile app marketplace have made it challenging for developers to differentiate their apps from competitors. To address that problem, developers spend significant amounts of money driving traffic to the app stores, only to discover that most visitors don't install. And with more of the marketing budget being poured into mobile than ever before, the cost of acquiring new installs has soared.

The biggest challenge is reducing these costs, and that is where ASO comes in. App store testing enables developers to boost organic growth, cut user acquisition (UA) costs, and better understand their audiences. It has proven to be an effective, data-driven method for significantly increasing the conversion rate (CVR) of app store pages while decreasing cost per install (CPI).
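The link between CVR and CPI is simple arithmetic. As a sketch, assume (a simplification, since real campaigns are often priced per impression or per install rather than per visit) that paid traffic costs a fixed amount $c_v$ per store page visit, and let $V$ be the number of visits:

```latex
\mathrm{CPI} = \frac{\text{Spend}}{\text{Installs}}
             = \frac{V \cdot c_v}{V \cdot \mathrm{CVR}}
             = \frac{c_v}{\mathrm{CVR}}
```

With a hypothetical $c_v$ of $0.50, raising CVR from 25% to 30% lowers CPI from $2.00 to about $1.67, while the cost of the traffic itself stays the same.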

But how can you turn creative testing into a sustainable growth strategy that also provides meaningful insights about your audiences?

What we’ve found is that companies using app store engagement data to inform their hypotheses and understand their audiences achieve a significant competitive edge and generate long-term success. Companies that use insufficient data (or no data at all) fail to create a sustainable strategy due to their lack of visitor behavior insights. With this in mind, we recently updated our dashboard to help you more effectively extract these important insights about your users.

This post explains why analyzing app store engagement is crucial to long-term growth strategies and how you can use this information in your own ASO efforts and in other areas of your install funnel.

How to Leverage App Store Testing Platforms to Understand Your Users

“We’re not satisfied by merely finding winning app store creatives unless we can truly explain why the winning variation is better.” – Noga Laron, Director of UA, Playstudios

As a growth leader, you should invest in finding and understanding channels that can provide sustainable growth. For ASO to become a strategy rather than a one-trick pony, it's imperative to understand your audiences and identify exactly which messages and creatives drive their actions. As Noga Laron, Director of UA at Playstudios, aptly puts it in the quote above, it's not enough to determine which creatives work. The key to the success of Playstudios' top-grossing casino app, POP! Slots, is that the team uses engagement insights to understand why one variation converts better than another.

As you begin to test, app store testing platforms and engagement data will help you answer valuable questions like these, so you can better understand your audiences:

- How do preferences vary across different target audiences?

- How many visitors dropped after seeing the First Impression? How many people immediately installed?

- How many people explored the app store page to see what else was available? How many of these Exploring Visitors browsed through the page but decided to drop?

- Did people watch the Video? Which exact chapters of the Video led to installs, and which led to drops?

- How many visitors scrolled through my Gallery?

- Which Screenshots had the most impact? How many Screenshots did people see before deciding to install or drop?
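Most of these questions reduce to simple counts once each visit is logged with a few engagement flags. The Python sketch below is a minimal illustration that assumes a hypothetical per-visit record (`Visit`, with `explored`, `installed`, `screenshots_seen`, and `watched_video` fields). It is not any testing platform's actual data model or any store's API, and real platforms track far richer events, such as per-second Video progress.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Visit:
    """One app store page visit. Hypothetical schema, not a real store or vendor API."""
    explored: bool         # interacted beyond the First Impression (scrolled, opened Gallery, etc.)
    installed: bool        # tapped Install during this visit
    screenshots_seen: int  # deepest Gallery position reached (0 = none)
    watched_video: bool    # started playing the Video


def funnel_summary(visits: list[Visit]) -> dict[str, int]:
    """Count installs and drops for First Impression vs. Exploring Visitors."""
    counts = {
        "first_impression_install": 0,
        "first_impression_drop": 0,
        "exploring_install": 0,
        "exploring_drop": 0,
        "video_watchers": 0,
    }
    for v in visits:
        segment = "exploring" if v.explored else "first_impression"
        outcome = "install" if v.installed else "drop"
        counts[f"{segment}_{outcome}"] += 1
        counts["video_watchers"] += int(v.watched_video)
    return counts


def conversion_by_depth(visits: list[Visit]) -> dict[int, float]:
    """Install rate among Exploring Visitors, keyed by the deepest Screenshot they saw."""
    seen: Counter = Counter()
    installs: Counter = Counter()
    for v in visits:
        if v.explored:
            seen[v.screenshots_seen] += 1
            installs[v.screenshots_seen] += int(v.installed)
    return {depth: installs[depth] / seen[depth] for depth in sorted(seen)}
```

A jump in `conversion_by_depth` at one Gallery position is the kind of signal that points to a specific Screenshot's message as worth testing elsewhere.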

Each app store creative has the potential to increase or decrease the level of intent visitors arrive with, and by answering the questions above, you get closer to isolating the exact messages that drive visitors to install.

Disclaimer: These results are not based on a real test.

In the sample test above, you can easily extract meaningful insights from the results and determine which messages convinced visitors to install. As the graph shows, the third asset caused a spike in conversion among Exploring Visitors, an insight that can be used to generate a new hypothesis. For example, how will your CVR change if you move this message earlier, either into the first few seconds of the Video or into the second Gallery asset? Will this specific message be effective in other areas of the install funnel (e.g., UA ads, app onboarding screens, etc.)? Using granular app store engagement data like this is key to developing a long-term ASO strategy.

App Store Tests with Insufficient Data

There are alternatives to testing with a platform, such as releasing a new variation to the live store without testing just to see what happens, or using Google Experiments to decide which creatives to showcase. While workable, these approaches are not enough to build a sustainable testing strategy that yields consistent results.

The Issue with Google Experiments

With Google Experiments, for example, you can A/B test your app store assets and get a sense of the overall CVR increase or decrease each variation produced. However, most of the time there is no clear winner, and even when there is, you still don't know why a certain variation won. Plus, you won't know whether the CVR improvement was driven by quality users or by a segment you're not even trying to target. If you want to analyze the true impact your creative changes have on visitor behavior, CVR, and performance, this tool isn't effective on its own.
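Part of why a clear winner is rare is statistical: small CVR differences need a lot of traffic to separate from noise. As an illustration only, the Python sketch below runs a standard two-sided, two-proportion z-test on hypothetical install counts. It is a generic textbook test, not the method Google Play uses to report experiment results, and the function name is our own.

```python
import math


def two_proportion_z_test(installs_a: int, visitors_a: int,
                          installs_b: int, visitors_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference between two conversion rates.

    Returns (z, p_value). Uses the pooled conversion rate for the standard error.
    """
    cvr_a = installs_a / visitors_a
    cvr_b = installs_b / visitors_b
    pooled = (installs_a + installs_b) / (visitors_a + visitors_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    if std_err == 0:
        raise ValueError("CVR is 0% or 100% in both variants; the test is undefined")
    z = (cvr_b - cvr_a) / std_err
    # Two-sided p-value from the standard normal tail: P(|Z| >= |z|) = erfc(|z| / sqrt(2))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical traffic: the same 30% vs. 33% CVR at two sample sizes.
for installs_a, installs_b, visitors in ((300, 330, 1_000), (3_000, 3_300, 10_000)):
    z, p = two_proportion_z_test(installs_a, visitors, installs_b, visitors)
    print(f"{visitors} visitors per variant: z = {z:.2f}, p = {p:.4f}")
```

With 1,000 visitors per variant, the 3-point lift gives p of roughly 0.15, so it is not a clear winner; with 10,000 per variant the same lift is highly significant. Even a significant result, though, says nothing about why the variation won.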

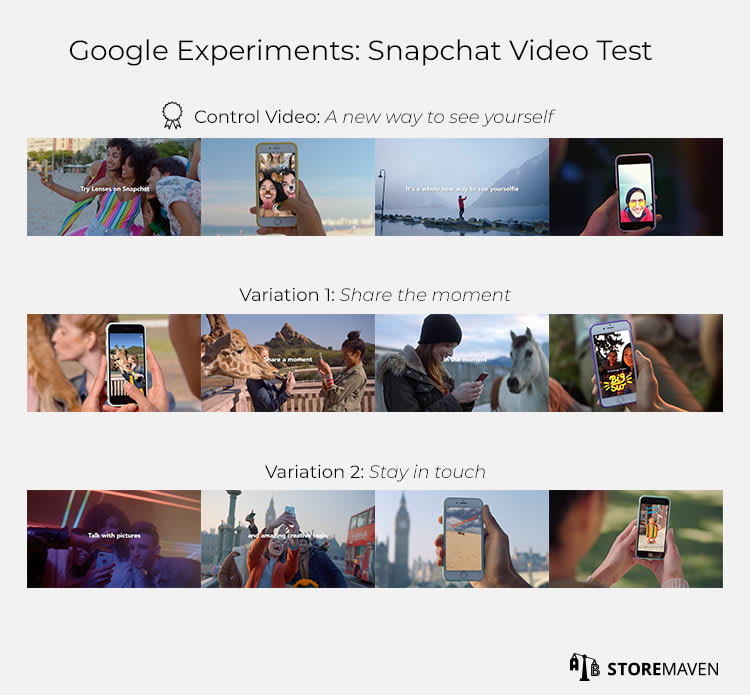

Let’s take a look at an example of a Google Experiment that Snapchat ran…

In the example above, Snapchat tested Videos with different messaging and themes as part of a larger strategy to differentiate itself from competitors in the highly saturated and competitive social app landscape. Each Video highlights a different value proposition in order to determine the most effective way to market Snapchat to potential users. Although the test produced a winner (meaning that one Video went on to receive 100% of traffic), it's hard to pinpoint what insight can be drawn from it.

Why did this Video perform better? How many visitors watched the Video and then decided to install or drop? Did visitors even watch the full Video? Which exact seconds pushed them to install? Is there a correlation between Video plays and app store page exploration (e.g., scrolling through the full Gallery, reading reviews, etc.)? What should Snapchat test next given the results? Most importantly, did the Video convert the audience that Snapchat was hoping to target?

Without this data, Snapchat would push a Video to the live store without fully understanding its implications and without laying the foundation for a long-term testing strategy. Videos take significant time, effort, and money to produce, and an optimized Video has the potential to boost CVR by 40%. However, a Video can also have the opposite effect and harm conversion if it fails to market your app or game effectively.

The Google Play Store and the Apple App Store are Different Platforms

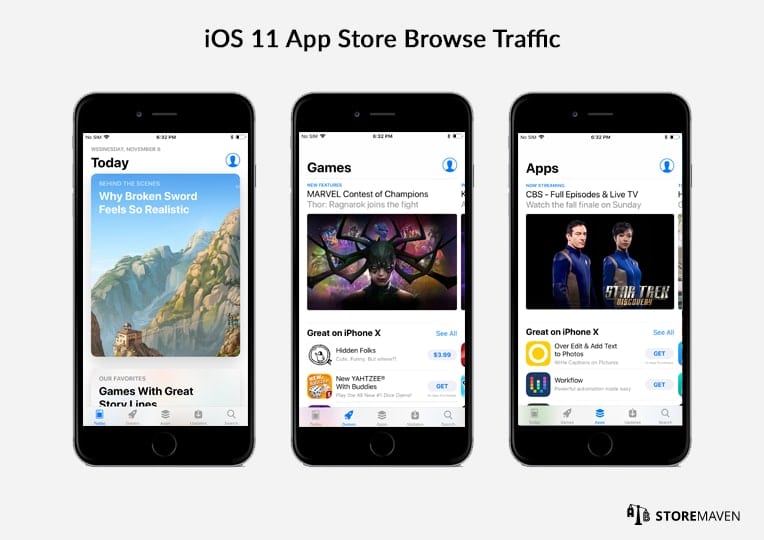

Additionally, many developers assume that their Google Experiments results also apply to the Apple App Store. This couldn't be further from the truth. In fact, we've found that using Google Play creatives on the App Store can lead to a 20-30% decrease in installs.

This is because:

- The store designs are still not the same (e.g., Google Play videos don't autoplay, image resolutions differ, etc.)

- Developers often drive different traffic to each store (e.g., different sources, campaigns, and ad banners)

- Different apps are popular on each platform, so competition varies

- Google Play's user base is not the same as iOS's: user mindsets and preferences are fundamentally different

So, if everything is different, why would you market your app in the same way? Short answer: you shouldn’t.

This isn’t to say that you should completely avoid using Google Experiments. However, if you only rely on tools that facilitate A/B tests but fail to uncover behavior insights, you’ll run into a wall and significantly reduce your chances of succeeding long-term. To achieve and sustain a true competitive advantage, you should be monitoring how people engage with your page, analyzing their different behaviors, and identifying what you can learn from those tests. Overall, using app store engagement data is a way to make your Google Experiments tests more successful.

The Role of App Store Engagement Data in Your Broader Marketing Strategy

In general, mobile marketing is all about understanding your users. Identifying what your target market cares about, what they look for in a product, and how they make their decisions is key to achieving success throughout the various funnel stages.

But as a mobile marketer, you operate in a seemingly impossible environment. You must deal with significant changes from month to month, including:

- The App Store and Google Play Store algorithms

- Evolving consumer tastes and preferences

- Changing competitive landscape in your category

- Overall trends, both in terms of design and messaging

- Real-world events and seasonality

Given how much keeps changing, the only way to achieve consistent success is to adapt your strategies to this dynamic environment. A significant part of this involves constantly testing and updating your assumptions about what works for your visitors and how you can market your app to them more effectively. Winning apps take messaging very seriously, and rigorously testing and iterating on that messaging is imperative.

---

StoreMaven is the leading ASO platform that helps global mobile publishers like Google, Zynga, and Uber test their App Store and Google Play creative assets and understand visitor behavior. If you’re interested in using app store engagement data to build a sustainable growth strategy, schedule a demo with us.